Big Picture AI Safety

May 23, 2024

Summary

We conducted 17 semi-structured interviews of AI safety experts about their big picture strategic view of the AI safety landscape: how will human-level AI play out, how things might go wrong, and what should the AI safety community be doing. While many respondents held “traditional” views (e.g. the main threat is misaligned AI takeover), there was more opposition to these standard views than we expected, and the field seems more split on many important questions than someone outside the field may infer.

What do AI safety experts believe about the big picture of AI risk? How might things go wrong, what should we do about it, and how have we done so far? Does everybody in AI safety agree on the fundamentals? Which views are consensus, which are contested and which are fringe? Maybe we could learn this from the literature (as in the MTAIR project), but many ideas and opinions are not written down anywhere: they exist only in people’s heads and in lunchtime conversations at AI labs and coworking spaces.

I set out to learn what the AI safety community believes about the strategic landscape of AI safety. I conducted 17 semi-structured interviews with a range of AI safety experts. I avoided going into any details of particular technical concepts or philosophical arguments, instead focussing on how such concepts and arguments fit into the big picture of what AI safety is trying to achieve.

This work is similar to the AI Impacts surveys, Vael Gates’ AI Risk Discussions, and Rob Bensinger’s existential risk from AI survey. It differs from those projects in that both my interviews and my analysis are more qualitative. Part of the hope for this project was that it could hit on harder-to-quantify concepts that are too ill-defined or intuition-based to fit the format of previous survey work.

Questions

I asked the participants a standardized list of questions.

- What will happen?

- Q1 Will there be a human-level AI? What is your modal guess of what the first human-level AI (HLAI) will look like? I define HLAI as an AI system that can carry out roughly 100% of economically valuable cognitive tasks more cheaply than a human.

- Q1a What’s your 60% or 90% confidence interval for the date of the first HLAI?

- Q2 Could AI bring about an existential catastrophe? If so, what is the most likely way this could happen?

- Q2a What’s your best guess at the probability of such a catastrophe?

- What should we do?

- Q3 Imagine a world where, absent any effort from the AI safety community, an existential catastrophe happens, but actions taken by the AI safety community prevent such a catastrophe. In this world, what did we do to prevent the catastrophe?

- Q4 What research direction (or other activity) do you think will reduce existential risk the most, and what is its theory of change? Could this backfire in some way?

- What mistakes have been made?

- Q5 Are there any big mistakes the AI safety community has made in the past or are currently making?

These questions changed gradually as the interviews went on (given feedback from participants), and I didn’t always ask the questions exactly as I’ve presented them here. I asked participants to answer from their internal model of the world as much as possible and to avoid deferring to the opinions of others (their inside view, so to speak).

Participants

- Adam Gleave is the CEO and co-founder of the alignment research non-profit FAR.AI. (Sept 23)

- Adrià Garriga-Alonso is a research scientist at FAR.AI. (Oct 23)

- Ajeya Cotra leads Open Philanthropy’s grantmaking on technical research that could help to clarify and reduce catastrophic risks from advanced AI. (Jan 24)

- Alex Turner is a research scientist at Google DeepMind on the Scalable Alignment team. (Feb 24)

- Ben Cottier is a researcher specializing in key trends and questions that will shape the trajectory and governance of AI at Epoch AI. (Oct 23)

- Daniel Filan is a PhD candidate at the Centre for Human-Compatible AI under Stuart Russell and runs the AXRP podcast. (Feb 24)

- David Krueger is an assistant professor in Machine Learning and Computer Vision at the University of Cambridge. (Feb 24)

- Evan Hubinger is an AI alignment stress-testing researcher at Anthropic. (Feb 24)

- Gillian Hadfield is a Professor of Law & Strategic Management at the University of Toronto and holds a CIFAR AI Chair at the Vector Institute for Artificial Intelligence. (Feb 24)

- Holly Elmore is currently running the US front of the Pause AI Movement and previously worked at Rethink Priorities. (Jan 24)

- Jamie Bernardi co-founded BlueDot Impact and ran the AI Safety Fundamentals community, courses and website. (Oct 23)

- Neel Nanda runs Google DeepMind’s mechanistic interpretability team. (Feb 24)

- Nora Belrose is the head of interpretability research at EleutherAI. (Feb 24)

- Noah Siegel is a senior research engineer at Google DeepMind and a PhD candidate at University College London. (Jan 24)

- Ole Jorgensen is a member of technical staff at the UK Government’s AI Safety Institute (this interview was conducted before he joined). (Mar 23)

- Richard Ngo is an AI governance researcher at OpenAI. (Feb 24)

- Ryan Greenblatt is an AI safety researcher at the AI safety non-profit Redwood Research. (Feb 24)

These interviews were conducted between March 2023 and February 2024, and represent their views at the time.

A very brief summary of what people said

What will happen?

Many respondents expected the first human-level AI (HLAI) to be in the same paradigm as current large language models (LLMs) like GPT-4, probably scaled up (made bigger), with some new tweaks and hacks, and scaffolding like AutoGPT to make it agentic. But a smaller handful of people predicted that larger breakthroughs are required before HLAI. The most common story of how AI could cause an existential disaster was the story of unaligned AI takeover, but some explicitly pushed back on the assumptions behind the takeover story. Some took a more structural view of AI risk, emphasizing threats like instability, extreme inequality, gradual human disempowerment, and a collapse of human institutions.

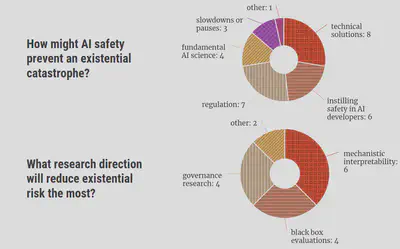

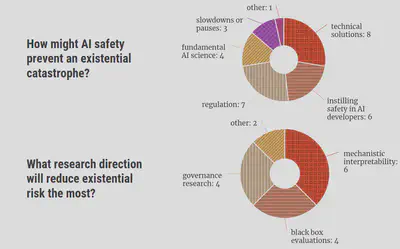

What should we do about it?

When asked how AI safety might prevent disaster, respondents focussed most on

- the technical solutions we might come up with,

- spreading a safety mindset through AI research,

- promoting sensible AI regulation,

- and helping build a fundamental science of AI.

The research directions people were most excited about were mechanistic interpretability, black box evaluations, and governance research.

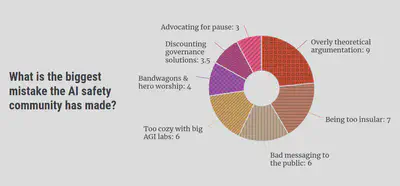

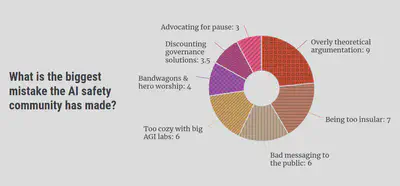

What mistakes have been made?

Participants pointed to a range of mistakes they thought the AI safety movement had made. There was no consensus and the focus was quite different from person to person. The most common themes included:

- an overreliance on overly theoretical argumentation,

- being too insular,

- putting people off by pushing weird or extreme views,

- supporting the leading AGI companies resulting in race dynamics,

- not enough independent thought,

- advocating for an unhelpful pause to AI development,

- and historically ignoring policy as a potential route to safety.

Limitations

- People had somewhat different interpretations of my questions, so they were often answering questions that were subtly different from each other.

- The sample of people I interviewed is not necessarily a representative sample of the AI safety movement as a whole. The sample was pseudo-randomly selected, optimizing for a) diversity of opinion, b) diversity of background, c) seniority, and d) who I could easily track down. Notably, there is an absence of individuals from MIRI, a historically influential AI safety organization, or those who subscribe to similar views. I approached some MIRI team members but no one was available for an interview. This is especially problematic since many respondents criticized MIRI for various reasons, and I didn’t get much of a chance to integrate MIRI’s side of the story into the project.

- There will also be a selection bias due to everyone I asked being at least somewhat bought into the idea of AI being an existential risk.

- A handful of respondents disagreed with the goal of this project: they thought that those in AI safety typically spend too much time thinking about theories of impact.

- There were likely a whole bunch of framing effects that I did not control for.

- There was in some cases a large gap in time between the interview and this being written up (mostly between 1 and 4 months, a year for one early interview). Participant opinions may have changed over this period.

How to read this post

This is not a scientific analysis of a systematic survey of a representative sample of individuals, but my qualitative interpretation of responses from a loose collection of semi-structured interviews. Take everything here appropriately lightly.

Results are often reported in the form “N respondents held view X”. This does not imply that “17-N respondents disagree with view X”, since not all topics, themes and potential views were addressed in every interview. What “N respondents held view X” tells us is that at least N respondents hold X, and consider the theme of X important enough to bring up.
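To make the counting rule concrete, here is a minimal sketch in Python. The coding labels (`held`, `disagreed`, `not_discussed`) are hypothetical names chosen for illustration, not the scheme actually used in the analysis. The counts borrow the takeover-story figures reported later in this post (7 respondents bought into it, 2 explicitly disagreed), and treating the remaining 8 interviews as "not discussed" is an assumption made purely for the example.

```python
from collections import Counter

TOTAL_RESPONDENTS = 17

# Hypothetical coding of a single theme across all interviews.
# 7 "held" and 2 "disagreed" mirror the takeover-story counts in this post;
# labelling the other 8 as "not_discussed" is an illustrative assumption.
codes = ["held"] * 7 + ["disagreed"] * 2 + ["not_discussed"] * 8

tally = Counter(codes)
held = tally["held"]
disagreed = tally["disagreed"]
not_discussed = tally["not_discussed"]

# The naive reading treats everyone who did not voice the view as opposed to it.
naive_disagree = TOTAL_RESPONDENTS - held

print(f"held view X:          {held}")
print(f"explicitly disagreed: {disagreed}")
print(f"theme not discussed:  {not_discussed}")
print(f"naive '17 - N' count: {naive_disagree}")
```

Under these assumed codes, the naive "17 - N" figure lumps together the respondents who explicitly disagreed and those for whom the theme never came up, which is why a reported N should be read as a lower bound on agreement rather than as one side of a vote.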

Structure of this post

Here I present a condensed summary of my findings, describing the main themes that came up for each question, split into three sections:

- What will happen? What will human-level AI look like, and how might things go wrong?

- What should we do? What should AI safety be trying to achieve and how?

- What mistakes has the AI safety movement made?

You don’t need to have read an earlier section to understand a later one, so feel free to zoom straight in on what interests you.

I am very grateful to all of the participants for offering their time to this project. Also thanks to Vael Gates, Siao Si Looi, ChengCheng Tan, Adam Gleave, Quintin Davis, George Anadiotis, Leo Richter, McKenna Fitzgerald, Charlie Griffin and many of the participants for feedback on early drafts.

1: What will the first human-level AI look like, and how might things go wrong?

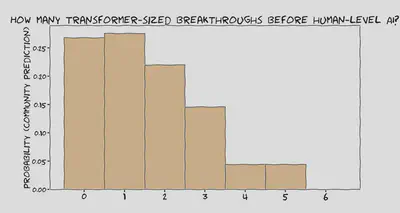

Many respondents expected the first human-level AI to be in the same paradigm as current large language models (LLMs), probably scaled up, with some new tweaks and hacks, and scaffolding to make it agentic. But a different handful of people predicted that reasonably large breakthroughs are required before HLAI, and gave some interesting arguments as to why. We also talked about what those breakthroughs will be, the speed of the transition, and the range of skills such a system might have.

The most common story of how AI could cause an existential disaster was the story of unaligned AI takeover, but some explicitly pushed back on the assumptions behind the takeover story. Misuse also came up a number of times. Some took a more structural view of AI risk, emphasizing threats like instability, extreme inequality, gradual disempowerment, and a collapse of human institutions.

What will the first human-level AI look like?

Q1: What is your modal guess of what the first human-level AI (HLAI) will look like? I define human-level AI as an AI system that can carry out roughly 100% of economically valuable cognitive tasks more cheaply than a human.

There were a number of possible ways I could ask roughly the same question: I could have defined human-level AI differently, or instead asked about “artificial general intelligence” or “transformative AI”, “superintelligence” or the “first AI that poses an existential risk”.

Participants would often say something like “this is a dumb definition, I prefer definition x”, or “the more interesting question is y”, and then go on to talk about x or y. In the answers I report below, you can assume by default that they’re talking about roughly “human-level AI” as I defined above, and I’ll mention when they’re pointing to something substantially different.

Will HLAI be a scaled-up LLM (with tweaks)?

7 people said roughly “yes”¹

7 respondents gave answers roughly implying that the first HLAI will not be radically different from today’s transformer-based LLMs like GPT-4.² It’ll almost certainly need, at minimum, some tweaks to the architecture and training process, better reinforcement learning techniques, and scaffolding to give it more power to make and execute plans.

2 of those 7 thought we should focus on the possibility of HLAI coming from the current paradigm regardless of how likely it is. This is because we can currently study LLMs to understand how things might go wrong, but we can’t study an AI system from some future paradigm or predict how to prepare for one. Even if a particular end is statistically more likely (like heart disease) it’s worth concentrating on the dangers you can see (like a truck careening in your direction).³

4 people said roughly “no”

4 respondents leaned towards HLAI being quite different to the current state-of-the-art.

Adam Gleave pointed out that we can’t simply continue scaling up current LLMs indefinitely until we hit HLAI because we’re going to eventually run out of training data. Maybe there will be enough data to get us to HLAI, but maybe not. If not, we will require a different kind of system that learns more efficiently.

Daniel Filan pointed out that not so long ago, many people thought that the first generally intelligent system would look more like AlphaGo, since that was the breakthrough that everyone was excited about at the time. Now that language models are all the rage, everyone is expecting language models to scale all the way to general intelligence. Maybe we’re making the same mistake? AlphaGo and LLMs have a number of parallels (e.g. both include a supervised foundation with reinforcement learning on top), but they are overall qualitatively different.

“I’m inclined to think that when we get AGI, its relation to the smart language models is going to be similar to the relation of smart language models to AlphaGo.” - Daniel Filan

Daniel also offered a thought experiment to illustrate that even human-level LLMs might not be flexible enough to be transformative. Imagine Google had access to human-level LLMs, which is kind of like being able to hire an infinite number of graduates. Could you automate all of Google with this infinite pool of graduates? Probably not. You would quickly run out of supervisors to supervise the graduates. And LLMs can’t build phones or maintain servers. Humans will still be necessary.

Adam highlighted a key uncertainty in answering whether LLMs will scale up to HLAI: can training on short-horizon tasks generalize to long-horizon tasks? We train today’s LLMs to solve short tasks like solving a textbook math problem. Can the skill of solving such short tasks be bootstrapped to longer tasks like writing the math textbook? If so, perhaps LLMs can eventually achieve human-level performance at any task.

How might HLAI look different to LLMs?

Ryan Greenblatt reckoned that, to be general and transformative, models may require reasoning beyond natural language reasoning. When a human thinks through a problem, their thought process involves a combination of linguistic reasoning (“if I do x then y will happen”) and more abstract non-linguistic reasoning (involving intuitions, emotions, visual thinking and the like). But serial LLM reasoning is mostly limited to chains of thought built from language. Models will likely require a deeper recurrent architecture to store and manipulate more abstract non-linguistic tokens.

David Krueger speculated that, while transformer-like models may constitute the plurality of an HLAI system or its building blocks, the first HLAI will likely involve many other components yet to be invented.

“Instead of one big neural net there might be a bunch of different neural nets that talk to each other – sometimes they’re operating as one big neural net. Think about mixture-of-experts but way more in that direction. […] Sometimes when people explore ideas like this mixture-of-experts they don’t pan out because they’re too fiddly to get working, they require a researcher to spend time tuning and tweaking them, thinking about the particular problem and the issues that come up. I think we can automate all of that and that’ll mean these sorts of ideas that are a little bit too complicated to get used much in practice will become real candidates for practical use.” - David Krueger

Will HLAI at least be a neural network?

Could HLAI require something even more different, like something beyond deep learning? 3 of the 4 respondents who discussed this question predicted that HLAI will most likely be made of neural networks of one kind or another.

“Deep learning is not just a phase. I think that deep learning works in part because it has actually distilled some of the major insights that the brain has.” - Nora Belrose

Adrià Garriga-Alonso pointed out that deep learning has been delivering all the breakthroughs since 2010, and there’s no reason to expect that to change before HLAI.

David was less sure about the place neural networks will have in HLAI. He predicted a 60-80% chance that we will build HLAI primarily from deep learning, but doesn’t find the alternative implausible:

“Deep learning is the most important part of it. But it might not be even close to the whole story.” - David Krueger

How fast will the transition be?

Some have speculated that, once we build an AI that can perform AI research (or at least automate it to a large degree), AI progress will become extremely fast, catapulting us to HLAI and superintelligence within a matter of months, days or even hours. This is sometimes called a “hard takeoff”.

4 respondents see a hard takeoff as likely (at varying degrees of hardness), and 1 finds it unlikely. Ajeya Cotra, David and Evan all emphasized the point in time when AI systems become able to do AI research as a “critical threshold”.

“Right now we’re seriously bottlenecked by human bandwidth, which is very limited. We make a very small number of decisions within a day. I think if humans were sped up by a factor of a million or something, we could optimize our architectures much more, just by thinking more intelligently about how to do things like sparsity and stuff.” - David Krueger

David finds it highly plausible that it takes less than 1 month to transition between “the status quo is being preserved, although we may have tons of very smart AI running around making disruptive-but-not-revolutionary changes to society” and “superhuman AI systems running amok”; this could happen because of recursive self-improvement, or other reasons, such as geopolitical tensions leading to the abandonment of safeguards, or systems rapidly gaining access to more resources such as compute, data, or physical systems such as robots. Ajeya expected the transition to be between several months and a couple of years.

What will transformative AI be good at?

As many participants brought up, my definition of human-level AI is simplistic. AI doesn’t get better at each kind of task at the same rate, and current AI systems are superhuman at some things and subhuman at others. AlphaZero is lightyears ahead of any human at Go, but that approach cannot solve tasks that are not zero-sum procedurally defined games. So my stupid definition prompted some interesting discussion about the rate of improvement of AI at different kinds of tasks.

Daniel expects AI to become very superhuman at most relevant tasks but still struggle with some edge cases for a long time. Ryan finds it plausible (around 40%) that the first AI systems to automate the majority of human labor will appear much stupider than humans in some ways and much smarter in other ways:

“It’s plausible that the first transformatively useful AIs aren’t qualitatively human level but are able to do all the cognitive tasks as well as a human using routes that are very different from humans. You can have systems that are qualitatively much dumber than humans but which are able to automate massive fractions of work via various mechanisms.” - Ryan Greenblatt

Richard Ngo emphasized the time horizon of a task as a key factor in the difficulty of a task for AI. Current LLMs can solve a 5-minute math puzzle but are nowhere near able to write a math textbook. By the time AI can do tasks as long as a human can, it will be obscenely good at short-term tasks.

“Current AI is wildly good at a bunch of stuff on short horizons and then just gets worse and worse for longer horizons. I think if you just extrapolate that, then when we get the first human-level system (by your definition) we’ll be like: okay, great – we finally managed to get it to run autonomously for a month, but before that point it would have already published a bunch of theoretical physics papers.” - Richard Ngo

Richard goes into more detail about time horizons in this post.

Human-level AI when?

“The field of AI has existed for 80 years or something, depending on when you want to start counting. Are we halfway there? It feels like we might be. Especially if we just increase inputs a ton in the future. It would be pretty weird if we were more than a hundred years away. Could we get it in the next ten years? Yeah, I think that’s possible. I don’t know, I could try to put numbers on that, but you’re not gonna get tons more info from the numbers than just from that.” - Daniel Filan

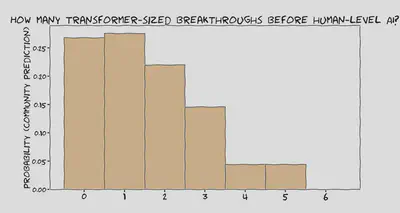

I received a number of estimates about the date of the first human-level AI, at varying degrees of confidence, in the form of medians and confidence intervals. There exist larger-N aggregates of this kind of prediction: for example the AI Impacts survey (N=1714, median=2047), this Metaculus question (N=154, median=2031) and Manifold market (N=313, median=2032).⁴ But I’ll show you what I learned here anyway to give you some context about the background assumptions of my sample of respondents, as well as some extra information on AI safety experts’ opinions.

How could AI bring about an existential catastrophe?

Q2: Could AI bring about an existential catastrophe? If so, what is the most likely way this could happen?

For a more rigorous N=135 survey of basically this question from a couple of years ago, see here, and for a comprehensive literature review see here. For a summary of my qualitative discussions instead, read on.

The phrase “existential catastrophe” contains a lot of ambiguity. Most commonly the respondents interpreted this to be Toby Ord’s definition: An existential catastrophe is the destruction of humanity’s long-term potential. This does not necessarily involve humans going extinct and doesn’t require any dramatic single event like a sudden AI coup. Some respondents talked about takeover, others talked about permanent damage to society.

The sources of risk

“We’re really bad at solving global coordination problems and that’s the fundamental underlying issue here. I like to draw analogies with climate change and say, hey - look at that - we’ve had scientific consensus there for something like 40 or 50 years and we’re still not taking effective coordinated action. We don’t even understand what it means or have any agreements about how to aggregate preferences or values, there’s a lot of potential for various factors to corrupt preference elicitation processes, and preference falsification seems to run rampant in the world. When you run this forward, at some point, out pops something that is basically an out-of-control replicator that is reasonably approximated by the conventional view of a superintelligence.” - David Krueger

What kinds of AI systems should we be most worried about? 2 respondents emphasized that the only AI systems we need to worry about are those with a sufficient amount of agency. An LLM by itself is not particularly scary, since it doesn’t have any long-term goals, and most of the stories of how things go wrong require such long-term goals.

One source of disagreement was whether the risk mainly came from proprietary models of big AI companies (the descendants of ChatGPT, Claude or Gemini) or open-source models.

“It’s an open question whether or not a reasonably careful AI company is enough to prevent a takeover from happening” - Adam Gleave

4 respondents emphasized the role of recklessness or a lack of care in the development of proprietary models in their extinction scenarios.

One respondent was instead more worried about the misuse of open-source models as an existential threat. There’s currently a big debate about whether open-sourcing the cutting edge of AI is good or bad.

Takeover by misaligned AI

Unsurprisingly, the most common vignette theme was that of takeover by a misaligned AI system (see for example here or here). 7 respondents bought into this story to some degree, while 2 explicitly disagreed with it. As the story usually goes: someone builds an agentic AI system that is highly capable of getting things done. Its goals are not totally aligned with its operator. Maybe it pretends to be aligned to make sure we don’t modify it. Because of instrumental convergence, it reasons that it can achieve its goals better if it seizes control of the world.

Adam addressed a common objection that an AI system by itself couldn’t possibly take control of the world:

“If you think “so what, it’s just a brain in a vat, what happens next?” It seems like the world is sufficiently vulnerable that it’s not that hard for even an Adam-level AI system that can make copies of itself and run fairly cheaply to pose a serious risk to humanity. Imagine what a thousand copies of yourself, working constantly, could do. That’s bigger than most academic departments. The team behind Stuxnet probably had no more than 100 people. You could at the very least do a significant amount of damage.

We’ve seen single humans come close to taking over entire continents in the past, so I don’t find it very far-fetched that a very smart AI system, with many copies of itself, could do the same, even without superintelligence.”

- Adam Gleave

Will the transition to HLAI result in a unipolar (a single AI agent with control of the world) or multipolar (many AI agents) world? I talked to 2 respondents about this, and both expected a unipolar scenario to be more likely.

Nora Belrose anticipated that if such a takeover were to happen, the AI that takes over wouldn’t be some commercial model like ChatGPT but a military AI, since such an AI would already have access to military power. You don’t need to imagine the extra steps of an AI seizing power from the ground up.

“I say Terminator and Skynet specifically because I’m being literal about it. I literally mean the Skynet scenario where it’s a military AI.” - Nora Belrose

Objections to the takeover scenario

2 respondents explicitly pushed against the takeover scenario. Alex Turner argued that a lot of the assumptions behind the misaligned takeover scenario no longer hold, given the way AI is currently going. Namely, AI systems have not turned out to be “literal genies” who always misinterpret the intent of your requests.

“LLMs seem pretty good at being reasonable. A way the world could have been, which would have updated me away from this, is if you can’t just be like ‘write me a shell script that prints out a cute message every time I log in’. You would have to be like: I’m using this operating system, you really need to be using bash, I don’t want zsh, I don’t want fish. And this should be low memory, you shouldn’t add a lot of extra stuff. Make sure it’s just a couple of lines, but don’t count new lines. Imagine if it was like this. It’s totally not like this. You can just say a couple of words and it’ll do the thing you had in mind usually.” - Alex Turner

Alex does consider an AI takeover possible, but not because of misaligned intent. If an AI takes over, it will be because a human asked it to.

“If North Korea really wanted to kill a lot of people and somehow they got their hands on this really sophisticated AI, maybe they’d be like, okay, kill everyone in the United States, don’t make it obvious that it’s on our behalf. Maybe this could happen. But I don’t think it would spontaneously build order proteins that would self-assemble into nanofactories or whatever. That’s just a really weird kind of plan” - Alex Turner

Other disaster scenarios

Going the way of the apes

An existential catastrophe, by Toby Ord’s definition, doesn’t necessarily require all humans to die out; it just requires AI to curtail most of the value in the future (by our human lights). Daniel offered a vignette of humans going the way of the apes:

“Let’s say the AIs have an economy that minimally relies on human inputs. They’re making the factories that make the factories and make the chips. They’re able to just run the world themselves. They do so in a way that’s roughly compatible with humans but not quite. At some point, it stops making sense to have humans run the show. I think my best guess for what happens then is like: the humans are just in a wildlife preserve type thing. We get Australia. And we’re just not allowed to fuck anything up outside of Australia.” - Daniel Filan

Extreme inequality

While Nora considered an AI takeover possible (around a 1% chance), she was much more concerned about the potential centralization of wealth and power caused by transformative AI. Such inequality could become locked in, which could curtail humanity’s long-term potential, or be a “fate worse than death” for the world. Nora gave this around a 5% chance of happening.

“Currently most humans have the ability to contribute something to the economy through their labor, this puts some floor on how poor the average person can get. But if humans are Pareto-dominated by AI it’s less clear that there’s a floor on how poor the average human can get.” - Nora Belrose

To Nora, a world where everyone can have their own AI system, rather than elites controlling AI, is better because it empowers everyone to gain from the AGI revolution. For this reason, Nora is broadly pro the development of open-source AI.

Nora conceded that AI will likely cause a big surplus of economic wealth, and there’s some chance this prevents the poorest from becoming arbitrarily poor. Whether or not the poorest in society are allowed the fruits of superintelligence will come down to politics.

A breakdown of trust

Gillian Hadfield viewed AI safety from a different angle: she is interested in the issue of normative competence. Roughly, will AI systems be trustworthy members of society? Will they be able to learn the rules of society and follow them? If AI systems are not normatively competent, this could cause a collapse of the economy which is hard or even impossible to recover from.

Her story goes like this. We deploy AIs broadly, and they become embedded in our human systems, like banking, law, and so on. But these AIs do not have normative competence: we cannot trust them to follow social and legal rules. This breaks our trust in these systems. And since these systems are built on trust, the systems themselves break down.

“It’s a bit like bank runs. If I lose confidence that an institution is going to be stable then I run to take my money out. In the developed world we take for granted high levels of trust. You can leave your car parked on the street. You can send your kids to school and you can eat whatever they’re serving in the restaurant. It may not take too much to break those systems.” - Gillian Hadfield

Such a breakdown of institutions could lead to a collapse of our economy. Gillian painted a picture of a world where humans opt out of interacting with the rest of the world. They stay at home and grow their own crops because they don’t feel safe to interact with the rest of the world.

Gillian argued that this will be hard to recover from. A big reason that today’s developing countries are still relatively poor is a lack of trust in institutions. It’s hard to get a loan to start a business because banks don’t trust that you’ll pay them back. And there’s no recipe for building trust, otherwise, the Middle East wouldn’t be in the mess it’s in now.

A vague sense of unease

Many respondents expressed high uncertainty about the future. I often had to push people to say anything concrete – I found myself saying “ok, can you at least give me some plausible-sounding vignette?”

4 respondents leaned particularly strongly towards uncertainty and a sense that whatever happens with AI, it will be some complicated chain of events that we can’t capture in a simple story like I’m trying to do here. Jamie, for example, said that he was following a heuristic that AI could be destabilizing for the world, so regardless of what a prospective catastrophe looks like, we should approach with caution. Alex predicted some complicated combination of shifts in capital and wealth, job displacement, the commodification of cognition, and a gradual loss of human control and autonomy. Richard reckoned the line between misalignment and misuse will become blurred. Holly Elmore wasn’t so interested in what concrete story is most likely to play out, but rather focussed on a lack of reassuring stories:

“If I don’t know how it’s impossible for AI to cause problems then I’m just going to assume that they’re possible, and that is unacceptable.” - Holly Elmore

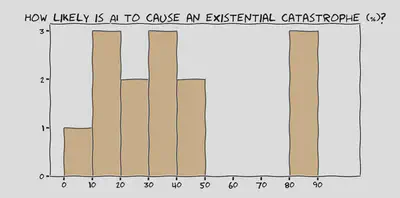

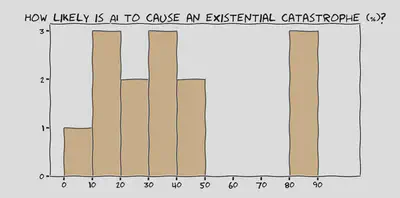

The probability of an existential disaster due to AI

I talked with some of the respondents about how likely they find an existential disaster due to AI. Lots of people had low confidence in their estimates, and many complained that this is not a helpful question to ask. Someone could spend a whole career trying to estimate the probability of disaster until they have a precise and robust percentage, but it won’t help us solve the problem. The important thing is that it’s not zero!

For a larger-N treatment of roughly this question, see the AI Impacts survey: 2704 machine learning researchers put a median 5% chance on HLAI being “extremely bad (e.g. human extinction)”.

2: What should AI safety be trying to achieve?

When asked how AI safety might prevent disaster, respondents focussed most on 1) the technical solutions we might come up with, 2) spreading a safety mindset through AI research, 3) promoting sensible AI regulation, and 4) building a fundamental science of AI. The research directions people were most excited about were mechanistic interpretability, black box evaluations, and governance research.

How could AI safety prevent catastrophe?

Q3 Imagine a world where, absent any effort from the AI safety community, an existential catastrophe happens, but actions taken by the AI safety community prevent such a catastrophe. In this world, what did we do to prevent the catastrophe?

Technical solutions

8 respondents considered the development of technical solutions to be important. 5 of those 8 focussed on the development of thorough safety tests for frontier models (like red-teaming, safety evaluations, and mechanistic interpretability). Such safety tests would be useful both for the voluntary testing of models by AI developers and for enforcing regulation. 4 of the 8 also emphasized the development of scalable oversight techniques.

One respondent hypothesized that if the first five or so AGI systems are sufficiently aligned, then we may be safe from an AI takeover scenario, since the aligned AGIs can hopefully prevent a sixth unaligned AGI from seizing power. Daniel however was skeptical of this.

Sounding the alarm to the AI community

6 respondents emphasized the role of AI safety in spreading a safety mindset and safety tools among AI developers.

3 of those respondents focussed on spreading a safety culture. The default is for safety to be largely ignored when a new technology is being developed:

“They’ll just analogize AI with other technologies, right? Early planes crashed and there was damage, but it was worth it because this technology is going to be so enormously transformative. So there are warning shots that are ignored.” - Noah Siegel

AI is different from these other technologies because we can’t approach AI with the same trial-and-error attitude – an error in the first AGI could cause a global disaster. AI should have a culture similar to that around building nuclear reactors: one with a process for deciding whether a new model is safe to deploy.

So how does one argue that we need more safety standards in AI? 2 respondents emphasized demonstrating the capabilities of models, the speed of capabilities progress, and working out how to predict dangerous capabilities in the future.

“Many doom stories start with people underestimating what the model can do.

Hopefully they don’t discover GPT-7 to be dangerous by testing it directly, but instead they do tests that show the trend line from GPT-4 is headed toward danger at GPT-7. And they have time to implement measures, share information with the government, share information with other developers and try and figure out how to navigate that. And hopefully they’ve already written down what they would do if they got to that point, which might be: ‘we’re going to improve our security up to X point, we’re going to inform ABC people in the government’, and so on.” - Ajeya Cotra

AI safety could also make AI development safer by developing better tools for testing the safety of these systems. As Jamie Bernardi put it: the AI takeover stories inform a particular flavor of AI testing that would not have been included in safety standards otherwise. Adam Gleave sees the value of AI safety to come from “continual horizon scanning and noticing problems that others are missing because the empirical evidence isn’t staring them in the face”.

AI Regulation

7 respondents emphasized getting policy passed to regulate the development of AI systems, although 2 others explicitly said that they were not enthusiastic about regulation.

The most common flavor of regulation suggested was a requirement that new AI models undergo safety testing before they are allowed to be deployed. This means a new dangerous model that otherwise would have gone on to cause damage (e.g. one that is deceptively aligned or power-seeking) may be “caught” by testing before it is deployed. This would not only prevent disaster but also serve as a wake-up call about the dangers of AI and supply a testbed for developing safer systems.

Holly Elmore was also a fan of the idea of emergency powers for governments: if it looks like an AI-related emergency is happening (like a rogue AI attempting to seize power), it would be good if governments could order the model to be isolated by shutting down whatever data centers are required for the model to be publicly accessible (this would also require systems to have the relevant kill-switches in compliance with regulation).

How do we get policy passed? Holly believes our best bet is public outreach. Educate the public about the risks, so the public can put pressure on governments to do the right thing. But what if, through our messaging, AI safety becomes a partisan issue, making it hard to pass policies? Holly acknowledged this risk but thought it doesn’t outweigh the benefits of going mainstream. She offered a good way of framing AI safety that seems less likely to have a polarizing effect:

“There are a small number of companies trying to expose the whole world to an existential risk, from which they would highly disproportionately benefit if their plan succeeded. It’s really not like “tech people against the world” or “business people against the world”. It’s just the AGI companies versus everyone else.” - Holly Elmore

Holly argued that many in AI safety have too much of an “elite disruptor mindset”, thinking they’ll be able to play enough 4D chess and make enough back-room deals to push the development of AI in the right direction independently of government or the public. But when you play 4D chess, something usually goes wrong. She gave the example of the role AI safety played in the founding of OpenAI and Anthropic: the idea was that these entities will build AI in a safe way voluntarily, but who knows if that’s actually going to happen. The more robust approach is to educate the public about the risks involved with AI, so society can collectively solve the problem through policy.

Fundamental science

“If you have something you think is a big deal then you want to do science about it full stop. You want to study anything that you think is important. And in this case, it’s that AI values are likely to be wrong. Therefore, you should study AI values, but you should do so in a way that’s pretty fundamental and universal.” - Richard Ngo

“Things that we do that affect the world’s understanding of what to do are more important than trying to do a lot of stuff behind the scenes. And in fact, I think a lot of the behind the scenes stuff has been net negative” - Holly Elmore

4 respondents believed that anything that improves our (society’s) understanding of the problem is robustly helpful. For example, when I asked Richard for ways AI safety can help the situation, he focussed on starting good institutions to do good science in AI safety and governance. When I asked him for a theory of change for this, he responded:

“I can make up answers to this, but I mostly try not to, because it’s almost axiomatic that understanding things helps. It helps in ways that you can’t predict before you understand those things. The entire history of science is just plans constantly failing and people constantly running into discoveries accidentally. I think it’s really easy to do stuff that’s non-robust in this field, so I am much more excited about people doing things that are robust in the sense that they push forward the frontier of knowledge.” - Richard Ngo

Richard pointed at the work of Epoch AI as an example of good solid fundamental research and compared it to some of the reports written by Open Philanthropy that are too high-level to be robust in his eyes.

I’ve always felt unsure about work that just generally improves our understanding of AI, because I’ve been worried that it will help AI developers improve the capabilities of AI systems faster, which gives us less time to prepare for crunch time. But through the course of this project, the respondents have **convinced me that increasing understanding is on average a good thing**.

“There are a bunch of cars driving in this foggy landscape and it turns out, unknown to them, there are spikes all over the landscape and there’s a cliff at the end, but there’s also big piles of gold along the way. Do you clear the fog? I feel if the cars are generally driving in the direction of the spikes and the cliff, you should clear the fog, even though that means the cars are going to be moving faster to try to weave to the gold, because otherwise the default course involves hitting the spikes or running off the cliff.” - Ajeya Cotra

Slowdowns & Pauses

3 respondents advocated for slowing down AI development in one way or another, to give the world more time to prepare for the first potentially dangerous AI systems (but one respondent was explicitly against this). AI capabilities progress can be slowed down by the red tape of regulation or by implementing a coordinated pause.

Ben Cottier emphasized buying time as a useful goal because he’s not optimistic about our ability to find good alignment strategies. We’ll find a safe way to build AGI eventually, but we need enough time to try out enough different approaches to find the correct approach.

One respondent, Alex Turner, would prefer to live in a world where the natural pace is slower, but disagrees with the proposals to pause AI development because he sees it as a panicked response to technical threat models that he considers baseless and nonsensical.

Open source

Nora Belrose’s main concern for the future of AI was extreme inequality rather than AI takeover. She argued that we can combat AI-induced inequality by advocating for and accelerating the development of open-source AI. She pointed out that open-sourcing might cause overall AI capabilities progress to slow down, since, for example, Mistral is reducing OpenAI’s revenue, which means OpenAI has fewer resources to invest in new capabilities. Nora acknowledged that open source increases the risk of misuse, but doesn’t consider things like terrorism a big enough risk to make open source bad overall.

“People who contribute to the Linux kernel are not usually worried about how this is gonna make the Linux kernel a little bit better for terrorists” - Nora Belrose

Most promising research directions

Q4 What research direction (or other activity) do you think will reduce existential risk the most, and what is its theory of change? Could this backfire in some way?

I would often phrase the last sentence as “could this speed up the development of AI capabilities?” and participants would commonly push back on this way of thinking. All useful safety research can, in principle, contribute to the progress in AI capabilities. But what are you going to do, not do any safety research?

“Things that contribute to raw horsepower without contributing anything about understandability or control are negative. And then things that contribute hugely to our ability to understand the situation and control systems are good to do even if they accelerate progress. And a lot of them will accelerate progress somewhat.” - Ajeya Cotra

Richard offered a distinction that he preferred: engineering vs science. “Engineering” is work towards building AI systems that are as powerful as possible, as fast as possible, without necessarily understanding everything about the system or how it will behave. “Science” is work towards understanding machine learning systems, which one can use to predict the behavior of the next frontier model and ultimately learn how to build it safely.

Mechanistic interpretability

“I’d put mechanistic interpretability in the ‘big if true’ category” - Neel Nanda

“It’s hard to imagine succeeding without it, unless we just get lucky.” - Evan Hubinger

The most popular answer, at 6 votes (but with 2 negative votes), was mechanistic interpretability (a.k.a. mechinterp): Find ways to reverse engineer neural networks, similar to how one might reverse engineer a compiled binary computer program (3 min explainer, longer intro).

Mechinterp by itself will not solve all of the problems of AI safety, but it may be beneficial to many different components of the safety agenda. It could be useful for:

- Auditing AI systems for dangerous properties like deception before they are deployed.

- Supplying safety metrics as a target for alignment approaches.

- Monitoring AI systems as they are running to look out for dangerous changes in behavior, e.g. goal misgeneralisation or treacherous turns.

- Deconfusion of threat models. For example, can we confirm that stories of goal-directed AI systems taking over are possible by empirically searching for long-term planning or goal-directedness inside neural networks?

- Automating AI safety research.

- Enabling human feedback methods, e.g., interpretability-assisted red-teaming & adversarial training.

- Debugging high-profile failures (e.g., something like the 2010 flash crash but precipitated by advanced AI) to learn from what went wrong.

Some think of mechinterp as a high-potential but speculative bet. That is, we don’t yet know how tractable mechinterp will turn out to be. It may turn out that neural networks are just fundamentally inscrutable – there is no human-understandable structure in there for us to find. But if it does work, it would be a huge win for safety. For example, mechanistic interpretability may give us a way to know with certainty whether an AI system is being honest with us or not. This is sometimes contrasted with more “direct” approaches like scalable oversight: contributing to scalable oversight gives a small but reliable improvement in the safety of AI systems.

Evan Hubinger had a somewhat different view: he considered mechinterp to be essential to building safe AI systems. He considers deception to be the main dangerous property we should be testing for in AI systems and argued that mechinterp is the only way we can totally rule out deception. He discussed how alternative approaches to searching for deception will not be reliable enough:

“So I’m gonna try to find deception with some algorithm: I set up my search procedure and I have a bunch of inductive biases, and a loss function. It may be the case that the search procedure just doesn’t find deceptive things. But currently at least, we have very little ability to understand how changing the parameters of your search changes the likelihood of finding a deceptive model, right? You can tinker with it all you want, and maybe tinkering with it actually has a huge impact. But if you don’t know what the direction of that impact is, it’s not that helpful. The thing that actually lets you understand whether in fact the model is doing some deceptive thing in a relatively robust way is interpretability” - Evan Hubinger

Black box evaluations

4 people were excited about black box evaluations – ways of testing a model for dangerous properties by studying its external behavior. If mechanistic interpretability is neuroscience, then black box evaluations are behavioral psychology. Here’s an example of this kind of work.

Black box evaluations have qualitatively all of the same benefits as mechinterp listed above, but in a more limited way (mechinterp gives us guarantees, black box evaluations give us easy wins). Ajeya Cotra and Ryan Greenblatt reckoned that more work should be going into black box evaluations relative to mechinterp than is the case right now.

“We have a lot of traction on this thing [black box evaluations] that could get up to 85% of what we need, and we have no traction on this other thing [mechinterp] and no good definition for it. But people have in their hearts that it could get us to 100% if we made breakthroughs, but I don’t think we necessarily have the time.” - Ajeya Cotra

The concrete recommendations that came up were: capabilities evaluations, externalized reasoning oversight (short & long intro), red-teaming (see here), and eliciting latent knowledge (see here).

Governance research and technical research useful for governance

4 respondents want more people to do work that will help AI be effectively governed.

David Krueger was interested in work that motivates the need for governance. Those outside AI circles, including policymakers, don’t yet understand the risks involved.

“It’s hard for people to believe that the problem is as bad as it actually is. So any place where they have gaps in their knowledge, they will fill that in with wildly optimistic assumptions.” - David Krueger

We should communicate more technical information to policymakers, like pointing out that we don’t understand how neural networks work internally, robustness has not been solved even though it’s been an open problem for 10 years, making threat models more specific and concrete, and showing effective demos of dangerous behaviors in AI.

David also suggested “showing what you can and can’t accomplish”:

“Say you want to prevent large-scale use of agentic AI systems to manipulate people’s political beliefs. Is this a reasonable thing to expect to accomplish through banning that type of use, or do you need to think about controlling the deployment of these systems?” - David Krueger

Ben focussed on compute governance: investigating questions like “how can an international watchdog detect if a certain party is training a large model?”.

Ben conceded that regulation has the potential to backfire, in that it causes “careful” countries to slow down relative to other more “reckless” countries. This could lead the first country to develop AGI to be one that would develop it in an unsafe way. It sounds like we need to strike some balance here. David also warned that just passing laws may not be enough:

“You might also have to worry about shifting norms that might underwrite the legitimacy of the policy. There’s lots of laws that are widely viewed as illegitimate or only having some limited legitimacy. Speed limits are not considered seriously by most people as something that you absolutely must obey, we all expect that people are going to speed to some extent, it’s very normalized. I expect the incentive gradients here are going to be very strong towards using AI for more and more stuff, and unless we are really able to police the norms around use effectively, it’s going to get really hard to avoid that.” - David Krueger

Other technical work

2 respondents were interested in ways to control potentially dangerous AI systems besides influencing their goals:

“We should be setting up the technical intervention necessary to accurately check whether or not AIs could bypass control countermeasures, then also making better countermeasures that ensure we’re more likely to catch AIs or otherwise prevent them from doing bad actions.” - Ryan Greenblatt

Ben mentioned research into how to build off-switches, so we can stop a rogue AI in its tracks. It’s a non-trivial problem to design a way to quickly shut down an AI system, because we design the data centers that AI systems run on with robustness principles: they are designed to continue running through power outages and the like.

Adam was an advocate for researching AI robustness: how to design AI that is robust to adversarial attacks. Robustness is crucial to scalable oversight: most proposed oversight approaches require adversarially robust overseers:

“We already have a number of alignment approaches that involve one AI system providing supervision to another system […] if every system in this hierarchy can be exploited, then you’re very likely to just get a bunch of systems hacking each other that will be quite difficult to detect.” - Adam Gleave

It’s also useful for preventing misuse: if we can make LLMs harder to jailbreak, then it will be harder for individuals to use them in damaging ways.

Gillian Hadfield’s framing of AI safety was all about making sure AI has normative competence: the ability to infer the rules of society from observation. So the technical work she was interested in was learning how to build normatively competent systems. A normatively competent AI is different from an aligned “good little obedient model”, because:

“These days, there are a lot of signs that say you must wear a mask or stand six feet apart. But we’re all normatively competent to know that those are not actually the rules anymore. Now, maybe some environments are what they are. Maybe I’m in a hospital, or maybe I’m in an environment with a community that is getting anxious about COVID again. So that normative competence requires reading what the equilibrium is.” - Gillian Hadfield

She is currently working on multi-agent reinforcement learning experiments to find out if reinforcement learning can imbue normative competence in agents.

Other honorable mentions included singular learning theory, steering vectors, and shard theory.

3: What mistakes has the AI safety movement made?

“Yeah, probably most things people are doing are mistakes. This is just some random group of people. Why would they be making good decisions on priors? When I look at most things people are doing, I think they seem not necessarily massively mistaken, but they seem somewhat confused or seem worse to me by like 3 times than if they understood the situation better.” - Ryan Greenblatt

“If we look at the track record of the AI safety community, it quite possibly has been harmful for the world.” - Adam Gleave

“Longtermism was developed basically so that AI safety could be the most important cause by the utilitarian EA calculus. That’s my take.” - Holly Elmore

Participants pointed to a range of mistakes they thought the AI safety movement had made. Key themes included an overreliance on theoretical argumentation, being too insular, putting people off by pushing weird or extreme views, supporting the leading AGI companies, insufficient independent thought, advocating for an unhelpful pause to AI development, and ignoring policy as a potential route to safety.

The following is a summary of the main themes that came up in my interviews. Many of the themes overlap with one another, and the way I’ve clustered the criticisms is likely not the only reasonable categorization.

Too many galaxy-brained arguments & not enough empiricism

“I don’t find the long, abstract style of investigation particularly compelling.” - Adam Gleave

9 respondents were concerned about an overreliance or overemphasis on certain kinds of theoretical arguments underpinning AI risk: namely Yudkowsky’s arguments in the sequences and Bostrom’s arguments in Superintelligence.

“All these really abstract arguments that are very detailed, very long and not based on any empirical experience. […]

Lots of trust in loose analogies, thinking that loose analogies let you reason about a topic you don’t have any real expertise in. Underestimating the conjunctive burden of how long and abstract these arguments are. Not looking for ways to actually test these theories. […]

You can see Nick Bostrom in Superintelligence stating that we shouldn’t use RL to align an AGI because it trains the AI to maximize reward, which will lead to wireheading. The idea that this is an inherent property of RL is entirely mistaken. It may be an empirical fact that certain minds you train with RL tend to make decisions on the basis of some tight correlate of their reinforcement signal, but this is not some fundamental property of RL.”

- Alex Turner

Jamie Bernardi argued that the original view of what AGI will look like, namely an RL agent that will reason its way to general intelligence from first principles, is not the way things seem to be panning out. The cutting-edge of AI today is not VNM-rational agents who are Bayesianly-updating their beliefs and trying to maximize some reward function. The horsepower of AI is instead coming from oodles of training data. If an AI becomes power-seeking, it may be because it learns power-seeking from humans, not because of instrumental convergence!

There was a general sense that the way we make sense of AI should be more empirical. Our stories need more contact with the real world – we need to test and verify the assumptions behind the stories. While Adam Gleave overall agreed with this view, he also warned that it’s possible to go too far in the other direction, and that we must strike a balance between the theoretical and the empirical.

Problems with research

This criticism of “too much theoretical, not enough empirical” also applied to the types of research we are doing. 4 respondents focussed on this. This was more a complaint about past research; folks were typically more positive about the amount of empirical work going on now.

2 people pointed at MIRI’s overreliance on idealized models of agency in their research, like AIXI. Adrià Garriga-Alonso thought that infrabayesianism, parts of singular learning theory and John Wentworth’s research programs are unlikely to end up being helpful for safety:

“I think the theory-only projects of the past did not work that well, and the current ones will go the same way.” - Adrià Garriga-Alonso

Evan Hubinger pushed back against this view by defending MIRI’s research approach. He pointed out that, when a lot of this very theoretical work was being done, there wasn’t much scope to do more empirical work because we had no highly capable general-purpose models to do experiments on – theoretical work was the best we could do!

“Now it’s very different. Now, I think the best work to do is all empirical. Empirical research looks really good right now, but it looked way less good three, four years ago. It’s just so much easier to do good empirical work now that the models are much smarter.” - Evan Hubinger

Too insular

8 participants thought AI safety was too insular: the community has disvalued forming alliances with other groups and hasn’t integrated other perspectives and disciplines.

2 of the 8 focussed on AI safety’s relationship with AI ethics. Many in AI safety have been too quick to dismiss the concerns of AI ethicists that AI could exacerbate current societal problems like racism, sexism and concentration of power, on the grounds of extinction risk being “infinitely more important”. But AI ethics has many overlaps with AI safety, both technically and in terms of policy:

“Many of the technical problems that I see are the same. If you’re trying to align a language model, preventing it from saying toxic things is a great benchmark for that. In most cases, the thing we want on an object level is the same! We want more testing of AI systems, we want independent audits, we want to make sure that you can’t just deploy an AI system unless it meets some safety criteria.” - Adam Gleave

In environmentalism, some care more about the conservation of bird species, while others are more concerned about preventing sea level rise. Even though these two groups may have different priorities, they shouldn’t fight because they agree on many important subgoals, and have many more priorities in common with each other than with, for example, fossil fuel companies. Building a broader coalition could be similarly important for AI safety.

Another 2 respondents argued that AI safety needs more contact with academia. A big fraction of AI safety research is only shared via LessWrong or the Alignment Forum rather than academic journals or conferences. This can be helpful as it speeds up the process of sharing research by sidestepping “playing the academic game” (e.g. tuning your paper to fit into academic norms), but has the downside that research typically receives less peer review, leading to on average lower quality posts on sites like LessWrong. Much of AI safety research lacks the feedback loops that typical science has. AI safety also misses out on the talent available in the broader AI & ML communities.

Many of the computer science and math kids in AI safety do not value insights from other disciplines enough, 2 respondents asserted. Gillian Hadfield argued that many AI safety researchers are getting norms and values all wrong because we don’t consult the social sciences. For example: STEM people often have an assumption that there are some norms that we can all agree on (that we call “human values”), because it’s just “common sense”. But social scientists would disagree with this. Norms and values are the equilibria of interactions between individuals, produced by their behaviors, not some static list of rules up in the sky somewhere.

Another 2 respondents accused the rationalist sphere of using too much jargony and sci-fi language. Esoteric phrases like “p(doom)”, “x-risk” or “HPMOR” can be off-putting to outsiders and a barrier to newcomers, and give culty vibes. Noah conceded that shorthands can be useful to some degree (for example they can speed up idea exchange by referring to common language rather than having to re-explain the same concept over and over again), but thought that on the whole AI safety has leaned too much in the jargony direction.

Ajeya Cotra thought some AI safety researchers, like those at MIRI, have been too secretive about the results of their research. They do not publish their findings due to worries that a) their insights will help AI developers build more capable AI, and b) they will spread AGI hype and encourage more investment into building AGI (although Adam considered that creating AI hype is one of the big mistakes AI safety has made, on balance he also thought many groups should be less secretive). If a group is keeping their results secret, this is in fact a sign that they aren’t high-quality results. This is because a) the research must have received little feedback or insights from other people with different perspectives, and b) if there were impressive results, there would be more temptation to share them.

Holly Elmore suspected that this insular behavior was not by mistake, but on purpose. The rationalists wanted to only work with those who see things the same way as them, and avoid too many “dumb” people getting involved. She recalled conversations with some AI safety people who lamented that there are too many stupid or irrational newbies flooding into AI safety now, and the AI safety sphere isn’t as fun as it was in the past.

Bad messaging

“As the debate becomes more public and heated, it’s easy to fall into this trap of a race to the bottom in terms of discourse, and I think we can hold better standards. Even as critics of AI safety may get more adversarial or lower quality in their criticism, it’s important that we don’t stoop to the same level. […] Polarization is not the way to go, it leads to less action.” - Ben Cottier

6 respondents thought AI safety could communicate better with the wider world. The AI safety community do not articulate the arguments for worrying about AI risk well enough, come across as too extreme or too conciliatory, and lean into some memes too much or not enough.

4 thought that some voices push views that are too extreme or weird (but one respondent explicitly pushed against this worry). Yudkowsky is too confident that things will go wrong, and PauseAI is at risk of becoming off-putting if they continue to lean into the protest vibe. Evan thought Conjecture has been doing outreach badly – arguing against sensible policy proposals (like responsible scaling policies) because they don’t go far enough. David Krueger however leaned in the opposite direction: he thought that we are too scared to use sensationalist language like “AI might take over”, while in fact, this language is good for getting attention and communicating concerns clearly.

Ben Cottier lamented the low quality of discourse around AI safety, especially in places like Twitter. We should have a high standard of discourse, show empathy to the other side of the debate, and seek compromises (with e.g. open source advocates). The current bad discourse is contributing to polarization, and nothing gets done when an issue is polarized. Ben also thought that AI safety should have been more prepared for the “reckoning moment” of AI risk becoming mainstream, so we had more coherent articulations of the arguments and reasonable responses to the objections.

Some people say that we shouldn’t anthropomorphize AI, but Nora Belrose reckoned we should do it more! Anthropomorphizing makes stories much more attention-grabbing (it is “memetically fit”). One of the most famous examples of AI danger has been Sydney: Microsoft’s chatbot that freaked people out by being unhinged in a very human way.

AI safety’s relationship with the leading AGI companies

“Is it good that the AI safety community has collectively birthed the three main AI orgs, who are to some degree competing, and maybe we’re contributing to the race to AGI? I don’t know how true that is, but it feels like it’s a little bit true.

If the three biggest oil companies were all founded by people super concerned about climate change, you might think that something was going wrong.”

- Daniel Filan

Concern for AI safety had at least some part to play in the founding of OpenAI, Anthropic and DeepMind. Safety was a stated primary concern that drove the founding of OpenAI. Anthropic was founded by researchers who left OpenAI because it wasn’t sufficiently safety-conscious. Shane Legg, one of DeepMind’s co-founders, is on record as being largely motivated by AI safety. The existence of these companies is arguably making AGI come sooner, and fuelling a race that may lead to more reckless corner-cutting in AI development. 5 respondents thought the existence of these three organizations is probably a bad thing.

Jamie thought the existence of OpenAI may be overall positive though, due to their strategy of widely releasing models (like ChatGPT) to get the world experienced with AI. ChatGPT has thrust AI into the mainstream and precipitated the recent rush of interest in the policy world.

3 respondents also complained that the AI safety community is too cozy with the big AGI companies. A lot of AI safety researchers work at OpenAI, Anthropic and DeepMind. The judgments of these researchers may be biased by a conflict of interest: they may be incentivised for their company to succeed in getting to AGI first. They will also be contractually limited in what they can say about their (former) employer, in some cases even for life.

Adam recommended that AI safety needs more voices who are independent of corporate interests, for example in academia. He also recommended that we shouldn’t be scared to criticize companies who aren’t doing enough for safety.

While Daniel Filan was concerned about AI safety’s close relationship with these companies, he conceded that there must be a balance between inside game (changing things from the inside) and outside game (putting pressure on the system from the outside). AI safety is mostly playing the inside game – get involved with the companies who are causing the problem, to influence them to be more careful and do the right thing. In contrast, the environmentalism movement largely plays an outside game – not getting involved with oil companies but protesting them from the outside. Which of these is the right way to make change happen? Seems difficult to tell.

The bandwagon

“I think there’s probably lots of people deferring when they don’t even realize they’re deferring.” - Ole Jorgensen

Many in the AI safety movement do not think enough for themselves, 4 respondents thought. Some are too willing to adopt the views of a small group of elites who lead the movement (like Yudkowsky, Christiano and Bostrom). Alex Turner was concerned about the amount of “hero worship” towards these thought leaders. If this small group is wrong, then the entire movement is wrong. As Jamie pointed out, AI safety is now a major voice in the AI policy world – making it even more concerning that AI safety is resting on the judgements of such a small number of people.

“There’s maybe some jumping to like: what’s the most official way that I can get involved in this? And what’s the community-approved way of doing this or that? That’s not the kind of question I think we should be asking.” - Daniel Filan

Pausing is bad

3 respondents thought that advocating for a pause to AI development is bad, while 1 respondent was pro-pause.5 Nora referred me to a post she wrote arguing that pausing is bad. In that post, she argues that pausing will a) reduce the quality of alignment research because researchers will be forced to test their ideas on weak models, b) make a hard takeoff more likely when the pause is lifted, and c) push capabilities research underground, where regulations are looser.

Discounting public outreach & governance as a route to safety

Historically, the AI safety movement has underestimated the potential of getting the public on-side and getting policy passed, 3 people said. There is a lot of work in AI governance these days, but for a long time most in AI safety considered it a dead end. The only hope to reduce existential risk from AI was to solve the technical problems ourselves, and hope that those who develop the first AGI implement them. Jamie put this down to a general mistrust of governments in rationalist circles, not enough faith in our ability to solve coordination problems, and a general dislike of “consensus views”.

Holly thought there was a general unconscious desire for the solution to be technical. AI safety people were guilty of motivated reasoning that “the best way to save the world is to do the work that I also happen to find fun and interesting”. When the Singularity Institute pivoted towards safety and became MIRI, they never gave up on the goal of building AGI – just started prioritizing making it safe.

“Longtermism was developed basically so that AI safety could be the most important cause by the utilitarian EA calculus. That’s my take.” - Holly Elmore

She also condemned the way many in AI safety hoped to solve the alignment problem via “elite shady back-room deals”, like influencing the values of the first AGI system by getting into powerful positions in the relevant AI companies.

Richard Ngo gave me similar vibes, arguing that AI safety is too structurally power-seeking: trying to raise lots of money, trying to gain influence in corporations and governments, trying to control the way AI values are shaped, favoring people who are concerned about AI risk for jobs and grants, maintaining the secrecy of information, and recruiting high school students to the cause. We can justify activities like these to some degree, but Richard worried that AI safety was leaning too much in this direction. This has led many outside of the movement to deeply mistrust AI safety.

“From the perspective of an external observer, it’s difficult to know how much to trust stated motivations, especially when they tend to lead to the same outcomes as deliberate power-seeking.” - Richard Ngo

Richard thinks that a better way for AI safety to achieve its goals is to instead gain more legitimacy by being open, informing the public of the risks in a legible way, and prioritizing competence.

More abstractly, both Holly and Richard reckoned that there is too much focus on individual impact in AI safety and not enough focus on helping the world solve the problem collectively. More power to do good lies in the hands of the public and governments than many AI safety folk and effective altruists think. Individuals can make a big difference by playing 4D chess, but it’s harder to get right and often backfires.

“The agent that is actually having the impact is much larger than any of us, and in some sense, the role of each person is to facilitate the largest scale agent, whether that be the AI safety community or civilization or whatever. Impact is a little meaningless to talk about, if you’re talking about the impact of individuals in isolation.” - Richard Ngo

Conclusion

While a lot of the answers were pretty unsurprising, there was in general more disagreement than I was expecting. While many expect the first human-level AI to be quite similar to today’s LLMs, a sizable minority gave reasons to doubt this. While the most common existential risk story was the classic AI takeover scenario, there were a number of interesting alternatives argued for.

When asked how AI safety might prevent disaster, respondents focussed most on 1) the technical solutions we might come up with, 2) spreading a safety mindset through AI research, 3) promoting sensible AI regulation, and 4) building a fundamental science of AI. The research directions people were most excited about were mechanistic interpretability, black box evaluations, and governance research.

Participants pointed to a range of mistakes they thought the AI safety movement had made. These included an overreliance on overly theoretical argumentation, being too insular, putting the public off by pushing weird or extreme views, supporting the leading AGI companies, not enough independent thought, advocating for an unhelpful pause to AI development, and ignoring policy as a potential route to safety.

Personally, I’m feeling considerably less nihilistic about AI safety after talking to all these people about how we can improve things. The world is complicated and there’s still a chance we get things wrong, but working hard to understand the problem and propose solutions seems a lot better than inaction. I’m also now more sympathetic to the view that we should just be improving the general understanding of the problem (both scientifically and to the public), instead of trying to intentionally nudge AI development in a particular direction through complicated strategies and back-room deals and playing 4D chess.

Notes

- I’m oversimplifying things here - in reality there is a spectrum of “how much HLAI will look like an LLM”. ↩︎

- By LLMs I really mean transformer-based multimodal models; state-of-the-art models do not just work with language. But these multimodal models are still typically referred to as LLMs, so I will use that terminology here too. ↩︎

- Thanks to Quintin Tyrell Davis for this helpful phrasing. ↩︎

- Figures from time of writing, April 2024. ↩︎

- Other respondents may also have been pro or anti-pause, but since the pause debate did not come up in their interviews I didn’t learn what their positions on this issue were. ↩︎
