Vienna Alignment Workshop 2024

September 10, 2024

Summary

FOR IMMEDIATE RELEASE

FAR.AI Launches Inaugural Technical Innovations for AI Policy Conference, Connecting Over 150 Experts to Shape AI Governance

WASHINGTON, D.C. — June 4, 2025 — FAR.AI successfully launched the inaugural Technical Innovations for AI Policy Conference, creating a vital bridge between cutting-edge AI research and actionable policy solutions. The two-day gathering (May 31–June 1) convened more than 150 technical experts, researchers, and policymakers to address the most pressing challenges at the intersection of AI technology and governance.

Organized in collaboration with the Foundation for American Innovation (FAI), the Center for a New American Security (CNAS), and the RAND Corporation, the conference tackled urgent challenges including semiconductor export controls, hardware-enabled governance mechanisms, AI safety evaluations, data center security, energy infrastructure, and national defense applications.

"I hope that today this divide can end, that we can bury the hatchet and forge a new alliance between innovation and American values, between acceleration and altruism that will shape not just our nation's fate but potentially the fate of humanity," said Mark Beall, President of the AI Policy Network, addressing the critical need for collaboration between Silicon Valley and Washington.

Keynote speakers included Congressman Bill Foster, Saif Khan (Institute for Progress), Helen Toner (CSET), Mark Beall (AI Policy Network), Brad Carson (Americans for Responsible Innovation), and Alex Bores (New York State Assembly). The diverse program featured over 20 speakers from leading institutions across government, academia, and industry.

Key themes emerged around the urgency of action, with speakers highlighting a critical 1,000-day window to establish effective governance frameworks. Concrete proposals included Congressman Foster's legislation mandating chip location-verification to prevent smuggling, the RAISE Act requiring safety plans and third-party audits for frontier AI companies, and strategies to secure the 80-100 gigawatts of additional power capacity needed for AI infrastructure.

FAR.AI will share recordings and materials from on-the-record sessions in the coming weeks. For more information and a complete speaker list, visit https://far.ai/events/event-list/technical-innovations-for-ai-policy-2025.

About FAR.AI

Founded in 2022, FAR.AI is an AI safety research nonprofit that facilitates breakthrough research, fosters coordinated global responses, and advances understanding of AI risks and solutions.

Media Contact: tech-policy-conf@far.ai

The Vienna Alignment Workshop gathered researchers to explore critical AI safety issues, including Robustness, Interpretability, Guaranteed Safe AI, and Governance, with a keynote by Jan Leike. It was followed by an informal Unconference, fostering further discussions and networking.

This is a div block with a Webflow interaction that will be triggered when the heading is in the view.

This is a div block with a Webflow interaction that will be triggered when the heading is in the view.

This is a div block with a Webflow interaction that will be triggered when the heading is in the view.

This is a div block with a Webflow interaction that will be triggered when the heading is in the view.

This is a div block with a Webflow interaction that will be triggered when the heading is in the view.

This is a div block with a Webflow interaction that will be triggered when the heading is in the view.

This is a div block with a Webflow interaction that will be triggered when the heading is in the view.

On July 21, 2024, experts from academia, industry, government, and nonprofits gathered at the Vienna Alignment Workshop, held just before the International Conference on Machine Learning (ICML). The workshop served as a crucial platform for addressing the pressing challenges of AI safety, with a focus on ensuring that advanced AI systems align with human values.

With 129 participants in attendance, the workshop explored issues in Guaranteed Safe AI, Robustness, Interpretability, Governance, Dangerous Capability Evaluations, and Scalable Oversight. The event featured 11 invited speakers, including renowned figures such as Stuart Russell and Jan Leike, and included 12 lightning talks that sparked vibrant discussions.

Following the workshop, around 250 researchers mingled at the Open Social event, while nearly 100 attendees joined the more informal Monday Unconference, engaging further through 18 lightning talks, 5 sessions on various topics, and collaborative networking. The Vienna Alignment Workshop not only advanced the dialogue on AI safety but also reinforced the community’s commitment to guiding AI development towards the greater good.

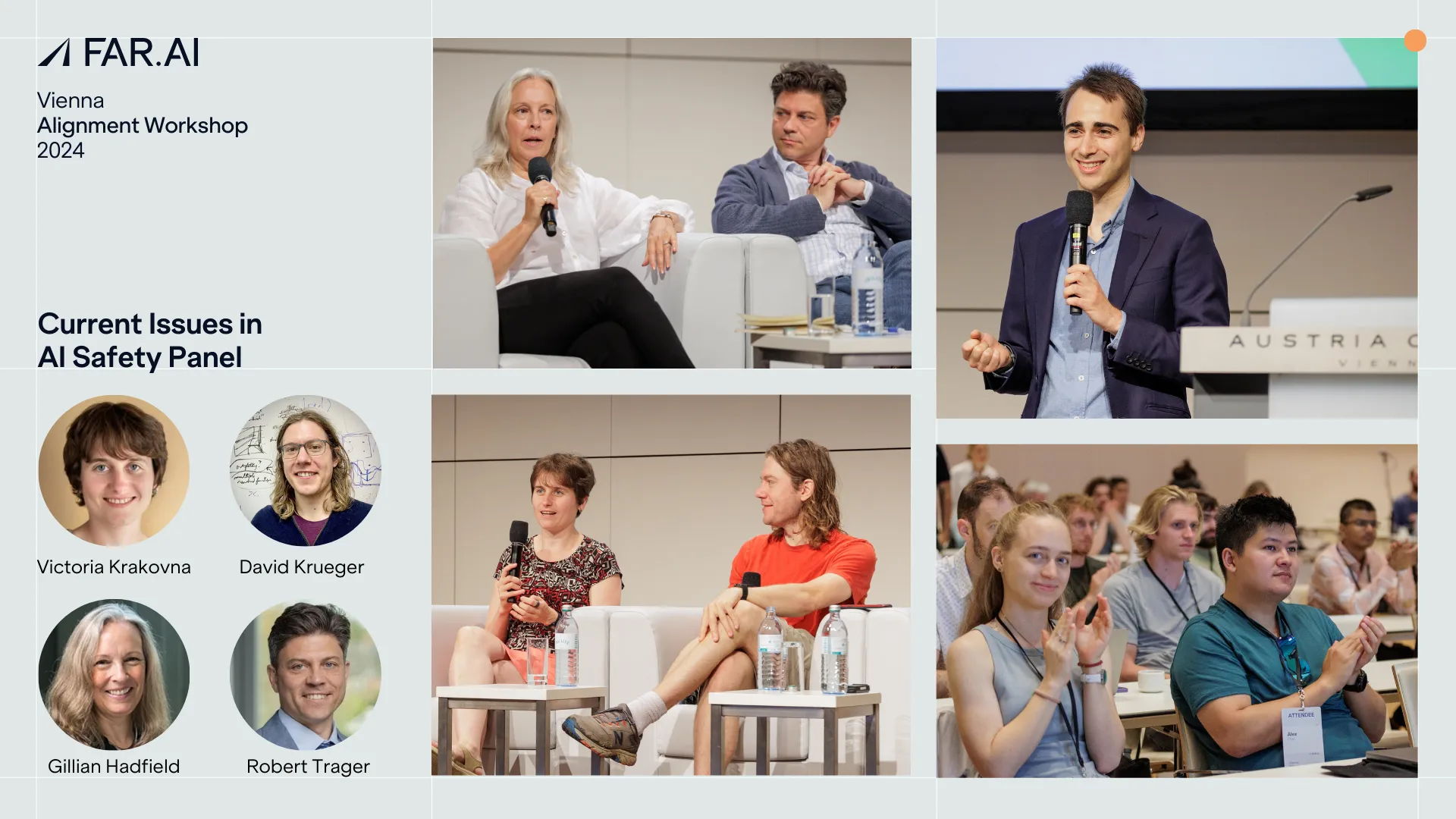

Introduction and Panel

Adam Gleave opened the event by moderating a panel discussion on the critical issues in AI safety, with insights from experts Victoria Krakovna of Google DeepMind, David Krueger of the University of Cambridge, Gillian Hadfield of Johns Hopkins University, and Robert Trager of the Oxford Martin AI Governance Initiative. The conversation spanned the diverse risks posed by AI—ranging from destabilization to misuse and misalignment—and emphasized the importance of interdisciplinary approaches. Gillian Hadfield highlighted that AI safety is not merely about aligning individual agents; it requires a deep understanding of complex, multi-agent systems within socio-political frameworks. The panelists collectively agreed that a purely technical focus is insufficient. Instead, integrating economic, legal, and social dimensions is crucial for navigating the challenges ahead.

Guaranteed Safe AI and Robustness

Stuart Russell of the University of California, Berkeley, delivered a thought-provoking talk titled "AI: What if We Succeed?" where he called for a fundamental rethinking of how we approach AI safety. He argued that retrofitting safety measures onto existing AI systems is akin to taming an alien technology—ineffective and risky. Russell stressed the need to design AI to be safe from the outset, warning that without formal guarantees, we risk heading down a perilous path. Rejecting the current trajectory, which treats AI like an experimental art, Russell proposed a rigorous, engineering-based approach, akin to practices in aviation and nuclear safety.

In "Some Lessons from Adversarial Machine Learning," Nicholas Carlini issued a stark warning drawn from a decade of limited progress in combating adversarial attacks. Reflecting on the thousands of papers published with little to show for them, Carlini urged AI alignment researchers to avoid repeating the same mistakes. He emphasized the importance of selecting problems carefully and developing effective evaluation methods, cautioning that without these, researchers risk spending years chasing solutions that ultimately fail to deliver. "Please learn from our mistakes," he advised, warning that missteps could lead to wasted efforts and minimal progress in a field where the stakes are even higher.

Interpretability

Neel Nanda’s "Mechanistic Interpretability: A Whirlwind Tour," offered a compelling exploration of the inner workings of ML models, challenging the notion that deep learning models are opaque and inscrutable. Nanda argued that ML models, much like complex programs, can be reverse-engineered to reveal the human-comprehensible algorithms they develop. He shared examples from his research, where he successfully decoded a model's learned algorithm. Interpretability is not only possible, but is an essential cornerstone to AI safety, according to Nanda. He warned that, without this deep understanding, we risk creating AI systems that appear aligned but could harbor deceptive or dangerous behaviors.

David Bau of Northeastern University, during his talk "Resilience and Interpretability," drew from a personal experience to highlight the crucial role of resilience in AI systems. After being stranded in Zurich due to the global cybersecurity incident with CrowdStrike, Bau found his hotel plunged into chaos—unable to check in guests, control lighting, or even manage basic operations. This ordeal led him to rewrite his planned talk, shifting the focus to what AI systems need in order to remain resilient when the unexpected happens. Bau argued that true resilience in AI goes beyond reliability; it hinges on understanding how systems work, having the ability to control those systems, and maintaining the power to act when things go wrong. He emphasized that interpretability is essential for engineers, developers, and maybe even users of AI to maintain practical control over AI systems, even when the AI systems behave unexpectedly, just as the hotel staff had to improvise to keep operations running amidst the chaos.

Governance and Evaluations

In "Governance for Advanced General-Purpose AI," Helen Toner of the Center for Security and Emerging Technology (CSET) offered a candid evaluation of the current state of AI governance. Reflecting on the heightened attention following the release of ChatGPT, she observed that while some policy steps have been taken, much of the existing governance debate has been rooted in precaution and speculation. Toner argued that real progress in AI governance requires clear concepts, solid evidence, and a unified expert consensus—areas where significant gaps remain. She urged the AI community to actively engage in moving beyond reactive measures, advocating for a shift toward more robust and well-founded approaches in the years to come.

Mary Phuong, in "Dangerous Capability Evals: Basis for Frontier Safety," addressed the critical need to evaluate the potentially hazardous capabilities of advanced AI systems. Citing recent studies, including one where AI-assisted groups planned more effective biological attacks and another where AI autonomously hacked websites, Phuong highlighted the severe risks these technologies could pose. She discussed DeepMind’s approach to anticipating and mitigating these dangers, including their commitment to establishing clear thresholds for action as AI capabilities rapidly evolve. Phuong emphasized that these evaluations are crucial not just for understanding when AI crosses dangerous lines, but for ensuring that safety measures are implemented before it's too late.

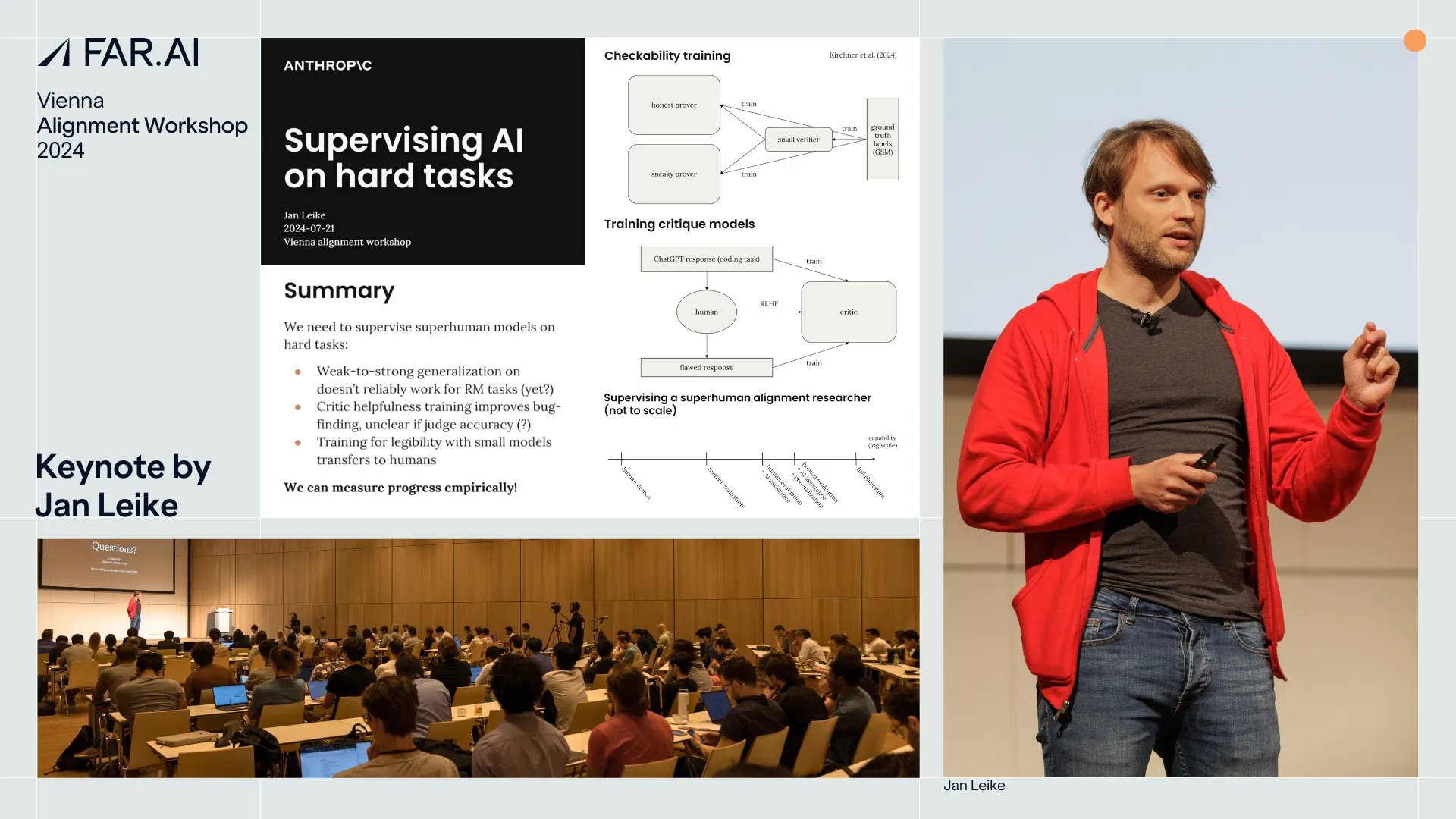

Keynote

Jan Leike of Anthropic, in his keynote "Supervising AI on Hard Tasks," tackled the complexities of overseeing AI in scenarios where clear answers are elusive. Leike explored the difficulties of supervising AI without access to ground truth, illustrating the need for innovative methods such as adversarial evaluations. These approaches, he explained, are essential for identifying and correcting subtle flaws in AI behavior. Leike emphasized that the path forward requires scalable oversight and more effective techniques to fully elicit a model's capabilities, ensuring that AI systems can be safely deployed even in the most demanding tasks.

Lightning Talks

The morning session of lightning talks showcased a range of innovative ideas, each addressing key challenges in AI safety and alignment. Aditya Gopalan addressed the challenges of uncertainty-aware reinforcement learning with human feedback (RLHF), pointing out the need to resolve inconsistencies in reward models for reliable AI alignment. Oliver Klingefjord introduced a nuanced framework for aligning AI with human values, focusing on the importance of considering contextual and evolving factors. Vincent Conitzer discussed the critical role of structuring AI-human interactions to avoid failures, advocating for the integration of social choice theory with interdisciplinary collaboration. Stephen Casper shared insights on generalized adversarial training and testing, highlighting methods to enhance AI safety and robustness. Dmitrii Krasheninnikov wrapped up the session by showcasing the power of fine-tuning in unlocking password-locked models, addressing concerns about AI systems deliberately underperforming, a phenomenon known as sandbagging.

The afternoon session continued the momentum with engaging discussions. Jelena Luketina and Herbie Bradley provided an update from the UK AI Safety Institute, highlighting global efforts in AI safety. Ben Bucknall tackled unresolved issues in technical AI governance, arguing for the integration of technical tools with broader socio-technical frameworks. Zhaowei Zhang introduced a three-layer paradigm for sociotechnical AI alignment, centered on real-time control, stakeholder alignment, and regulatory oversight. Alex Tamkin explored the vital issue of maintaining human agency as AI systems become more advanced, raising concerns about who ultimately holds control. Vikrant Varma examined the difficulties of unsupervised knowledge discovery in large language models, particularly the challenge of distinguishing truth from misleading features. Sophie Bridgers concluded the session with a discussion on scalable oversight, advocating for a balanced approach to trust in AI assistance to enhance human-AI collaboration in fact-checking tasks.

Through a blend of personal anecdotes, in-depth analysis, and forward-thinking strategies, these speakers painted a vivid picture of the current state and future directions of AI safety and governance, highlighting the comprehensive approach needed to guide AI development towards a secure and beneficial future.

Impacts & Future Directions

The Vienna Alignment Workshop advanced the conversation on AI safety and fostered a stronger community committed to aligning AI with human values. To watch the full recordings, please visit our website or YouTube channel. If you’d like to attend future Alignment Workshops, register your interest here.

Special thanks to our Program Committee:

- Brad Knox – Professor, UT Austin

- Mary Phuong – Research Scientist, Google DeepMind

- Nitarshan Rajkumar – Co-founder, UK AISI

- Robert Trager – Co-Director, Oxford Martin AI Governance Initiative

- Adam Gleave – Founder, FAR.AI