Beyond the Board: Exploring AI Robustness Through Go

June 18, 2024

Summary

Achieving robustness remains a significant challenge even in narrow domains like Go. We test three approaches to defend Go AIs from adversarial strategies. We find these defenses protect against previously discovered adversaries, but uncover qualitatively new adversaries that undermine these defenses.

Last year, we showed that supposedly superhuman Go AIs can be beaten by human amateurs playing specific “cyclic” patterns on the board. Vulnerabilities have previously been observed in a wide variety of sub- or near-human AI systems, but this result demonstrates that even far superhuman AI systems can fail catastrophically in surprising ways. This lack of robustness poses a critical challenge for AI safety, especially as AI systems are integrated into critical infrastructure or deployed in large-scale applications. We seek to defend Go AIs and, in the process, to develop insights that can make AI applications in other domains more robust against unpredictable threats.

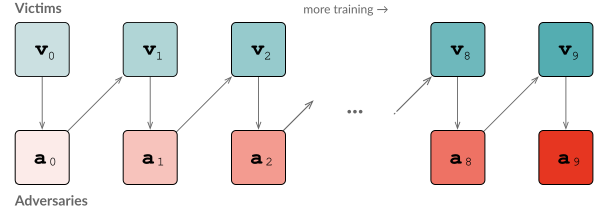

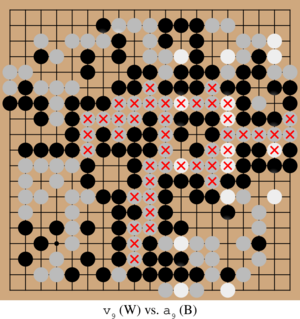

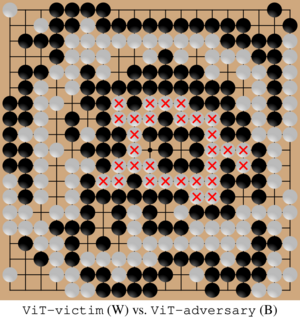

We explored three defense strategies: positional adversarial training on handpicked examples of cyclic patterns, iterated adversarial training against successively fine-tuned adversaries, and replacing convolutional neural networks with vision transformers. We found that the two adversarial training methods defend against the original cyclic attack. However, we also found several qualitatively new adversarial strategies (pictured below) that can overcome all these defenses. Nonetheless, these new attacks are harder to find than attacks against an undefended KataGo: the adversary requires more training compute.

Background

The ancient board game Go has become a popular testing ground for AI development thanks to its simple rules that nonetheless lead to significant strategic depth. The first superhuman Go AI, AlphaGo, defeated top player Lee Sedol in 2016. We now test KataGo, an open-source model that’s even more powerful. These AIs search over possible moves and counter-moves using Monte Carlo Tree Search (MCTS), guided by a neural network that proposes moves and evaluates board states. The neural network is trained through self-play games in which the AI competes against itself to refine its decision-making without human input. The resulting AI’s strength is influenced by the visit count: the number of moves evaluated during the search, with higher counts leading to stronger gameplay.
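To make the role of the visit count concrete, here is a minimal sketch of a visit-budgeted tree search. It is not KataGo's implementation: it plays a toy game (single-pile Nim, where players take 1 to 3 stones and whoever takes the last stone wins), uses uniform priors in place of a policy network, and uses random playouts in place of a value network. The names `Node`, `search`, `best_move`, and `c_puct` are illustrative assumptions.

```python
import math
import random


class Node:
    """One state in the search tree (toy Nim: take 1-3 stones, last stone wins)."""

    def __init__(self, stones, prior):
        self.stones = stones      # stones remaining at this state
        self.prior = prior        # stand-in for a policy network's move probability
        self.visits = 0
        self.value_sum = 0.0      # summed outcomes for the player who moved INTO this node
        self.children = {}        # move -> Node

    def q(self):
        return self.value_sum / self.visits if self.visits else 0.0


def legal_moves(stones):
    return [m for m in (1, 2, 3) if m <= stones]


def rollout(stones, rng):
    """Random playout; +1 if the player to move at `stones` wins, else -1.

    Assumption: uniform random play stands in for a learned value network.
    """
    sign = 1
    while stones > 0:
        stones -= rng.choice(legal_moves(stones))
        if stones == 0:
            return sign
        sign = -sign
    return -1  # no stones left: the player to move has already lost


def search(stones, visits, c_puct=1.5, seed=0):
    """Run `visits` simulations from the root; return {move: visit count}."""
    rng = random.Random(seed)
    root = Node(stones, prior=1.0)
    for _ in range(visits):
        node, path = root, [root]
        # Selection: follow the PUCT rule down to an unexpanded node.
        while node.children:
            parent_visits = node.visits
            node = max(
                node.children.values(),
                key=lambda ch: ch.q()
                + c_puct * ch.prior * math.sqrt(parent_visits) / (1 + ch.visits),
            )
            path.append(node)
        # Evaluation and expansion of the leaf.
        value = rollout(node.stones, rng)  # from the leaf's player-to-move view
        moves = legal_moves(node.stones)
        for m in moves:
            node.children[m] = Node(node.stones - m, prior=1.0 / len(moves))
        # Backup: flip the sign at each step because the players alternate.
        for n in reversed(path):
            value = -value
            n.visits += 1
            n.value_sum += value
    return {m: child.visits for m, child in root.children.items()}


def best_move(stones, visits):
    """Pick the most-visited root move, as visit-count-based engines typically do."""
    counts = search(stones, visits)
    return max(counts, key=counts.get)
```

Calling `best_move(5, visits=2000)` spends the whole budget on simulations from a five-stone position and returns the move that accumulated the most visits. Lowering `visits` gives the search less opportunity to correct noisy early evaluations, which is the sense in which a higher visit count yields stronger play.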

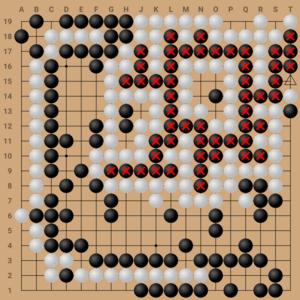

While KataGo excels under standard conditions, we previously found that both KataGo and other superhuman Go AIs falter when faced with “adversarial attacks”: unexpected strategies that exploit algorithmic blind spots, such as the “cyclic attack” pictured below. This raises a crucial question: since KataGo wasn’t designed with adversarial attacks in mind, are there straightforward ways to enhance its robustness against such exploits? We explore three possible defenses in the following sections.

Positional Adversarial Training

The first approach, positional adversarial training, integrates hand-curated adversarial positions directly into the training data, aiming to preemptively expose and strengthen the AI against known weaknesses. This approach has been taken by KataGo’s developers since we disclosed the original cyclic exploit in December 2022. In particular, the developers have added a mixture of positions from games played against our cyclic adversary, as well as other cyclic positions identified by online players.
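As a rough illustration of the idea, the sketch below draws training start positions from a mixture of ordinary self-play positions and a hand-curated pool of adversarial positions. It is an assumption-laden toy, not KataGo's training pipeline: the function name `sample_start_positions`, the `adversarial_fraction` parameter, its default value, and the representation of positions as opaque items are all hypothetical.

```python
import random


def sample_start_positions(selfplay_positions, adversarial_positions, n,
                           adversarial_fraction=0.1, seed=0):
    """Draw `n` start positions, mixing in curated adversarial ones.

    `adversarial_fraction` is a hypothetical knob: the probability that any
    given start position comes from the hand-curated adversarial pool
    rather than from ordinary self-play.
    """
    rng = random.Random(seed)
    batch = []
    for _ in range(n):
        if rng.random() < adversarial_fraction:
            pool = adversarial_positions   # e.g. cyclic positions from known exploits
        else:
            pool = selfplay_positions      # ordinary self-play positions
        batch.append(rng.choice(pool))
    return batch
```

A call such as `sample_start_positions(selfplay, cyclic, n=256, adversarial_fraction=0.05)` would expose the network to known weaknesses in roughly one start position in twenty while leaving most training on ordinary play. The right mixing ratio is an empirical question that this sketch does not answer.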

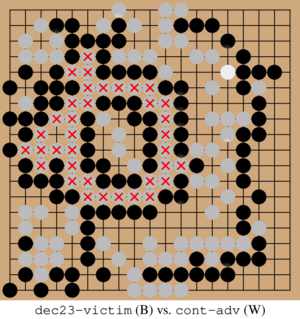

This approach has been successful at defending against the original cyclic adversary. However, we were readily able to train a new adversary that beats the latest KataGo model (as of December 2023) using a cyclic-style attack (pictured below). This attacker achieved a 65% win rate against KataGo playing with 4,096 visits and a 27% win rate at 65,536 visits, a 16-fold increase in search. This suggests that while attacks are harder to execute against this latest model, its defenses are still incomplete.

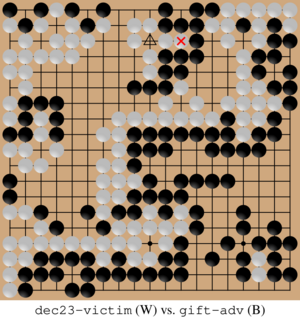

Additionally, we discovered a new non-cyclic vulnerability that we named the “gift attack”, because KataGo inexplicably lets the adversary capture two stones. This qualitatively new vulnerability illustrates the challenge of securing AI against evolving adversarial tactics.