Codebook Features: Sparse and Discrete Interpretability for Neural Networks

October 19, 2023

Summary

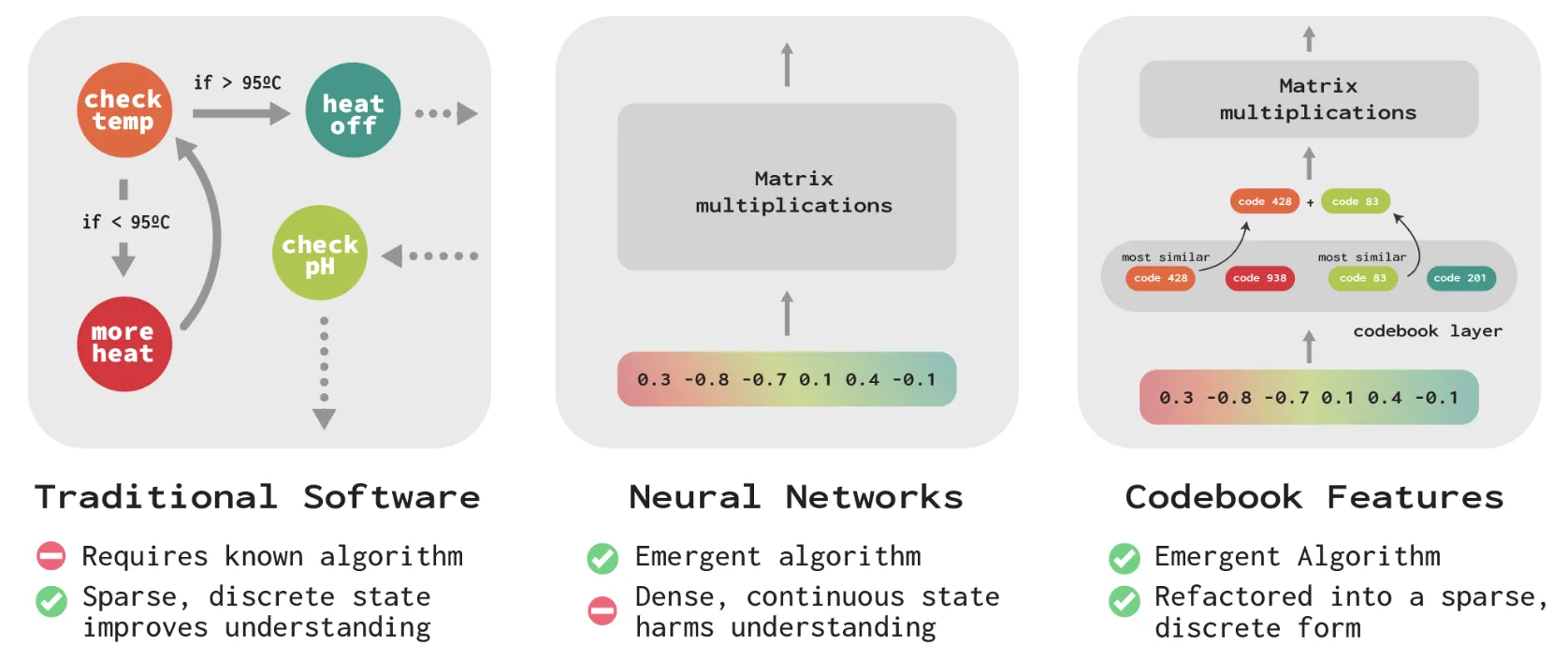

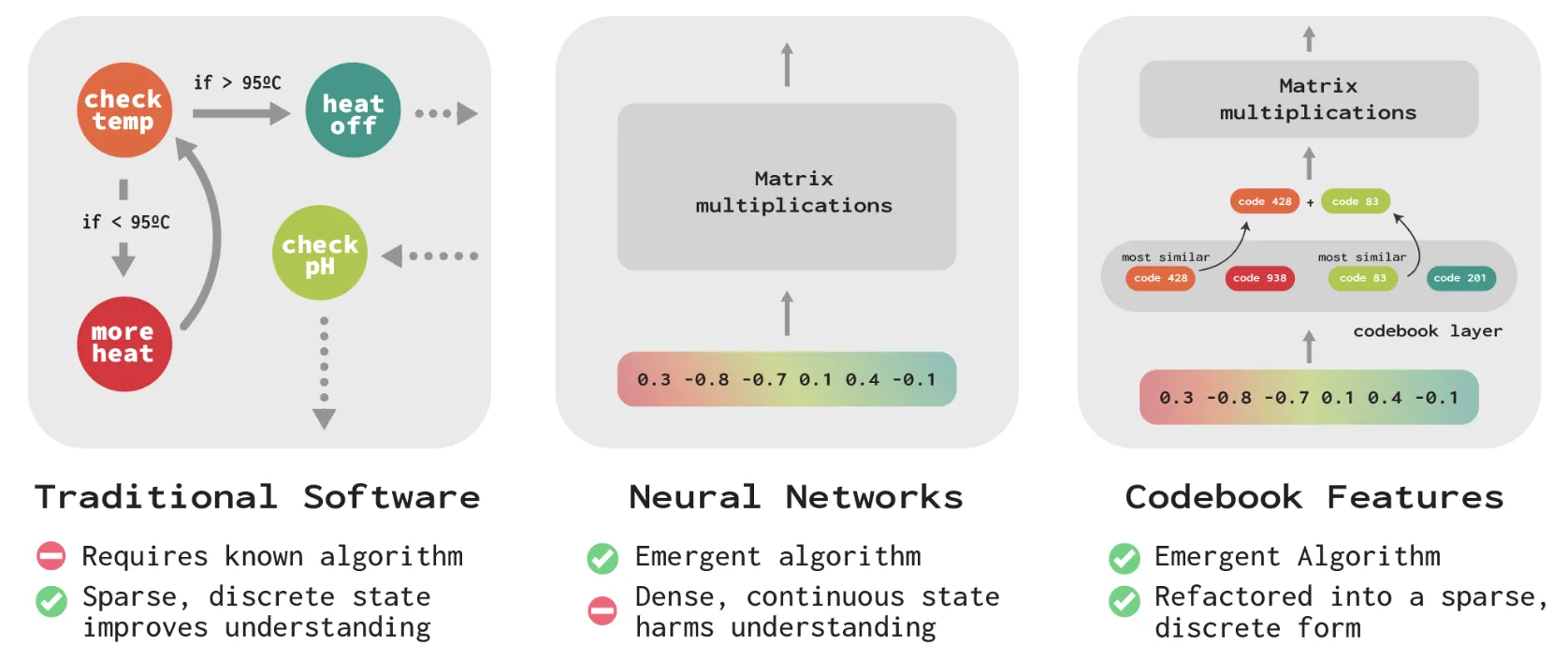

We demonstrate Codebook Features: a way to modify neural networks to make their internals more interpretable and steerable while causing only a small degradation of performance. At each layer, we apply a quantization bottleneck that forces the activation vector into a sum of a few discrete _codes_, converting an inscrutable, dense, and continuous vector into a discrete list of codes from a learned 'codebook', each of which is either on or off.

We found a way to modify neural networks to make their internals more interpretable and steerable while causing only a small degradation of performance. At each layer, we apply a quantization bottleneck that forces the activation vector into a sum of a few discrete codes, converting an inscrutable, dense, and continuous vector into a discrete list of codes from a learned codebook, each of which is either on or off.
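To make the bottleneck concrete, here is a minimal sketch in plain Python. It assumes that codes are chosen by cosine similarity to the activation and that the `k` best-matching codes are summed; the text above only says that the activation becomes a sum of a few codes, so the selection rule, the function names, and the toy codebook are illustrative assumptions rather than the exact implementation.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def codebook_bottleneck(activation, codebook, k):
    """Replace a dense activation with the sum of its k best-matching codes.

    Assumption: "best-matching" means highest cosine similarity.
    Returns (ids of the active codes, quantized vector).
    """
    scores = [cosine(activation, code) for code in codebook]
    ranked = sorted(range(len(codebook)), key=lambda i: scores[i], reverse=True)
    active = sorted(ranked[:k])
    quantized = [sum(codebook[i][d] for i in active) for d in range(len(activation))]
    return active, quantized

# Toy codebook with four 3-dimensional codes (hypothetical values).
codebook = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [-1.0, 0.0, 0.0],
]
ids, quantized = codebook_bottleneck([0.9, 0.4, -0.1], codebook, k=2)
print(ids, quantized)
```

In this sketch, the list `ids` is the interpretable object: downstream layers only ever see `quantized`, which is fully determined by which codes are on.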

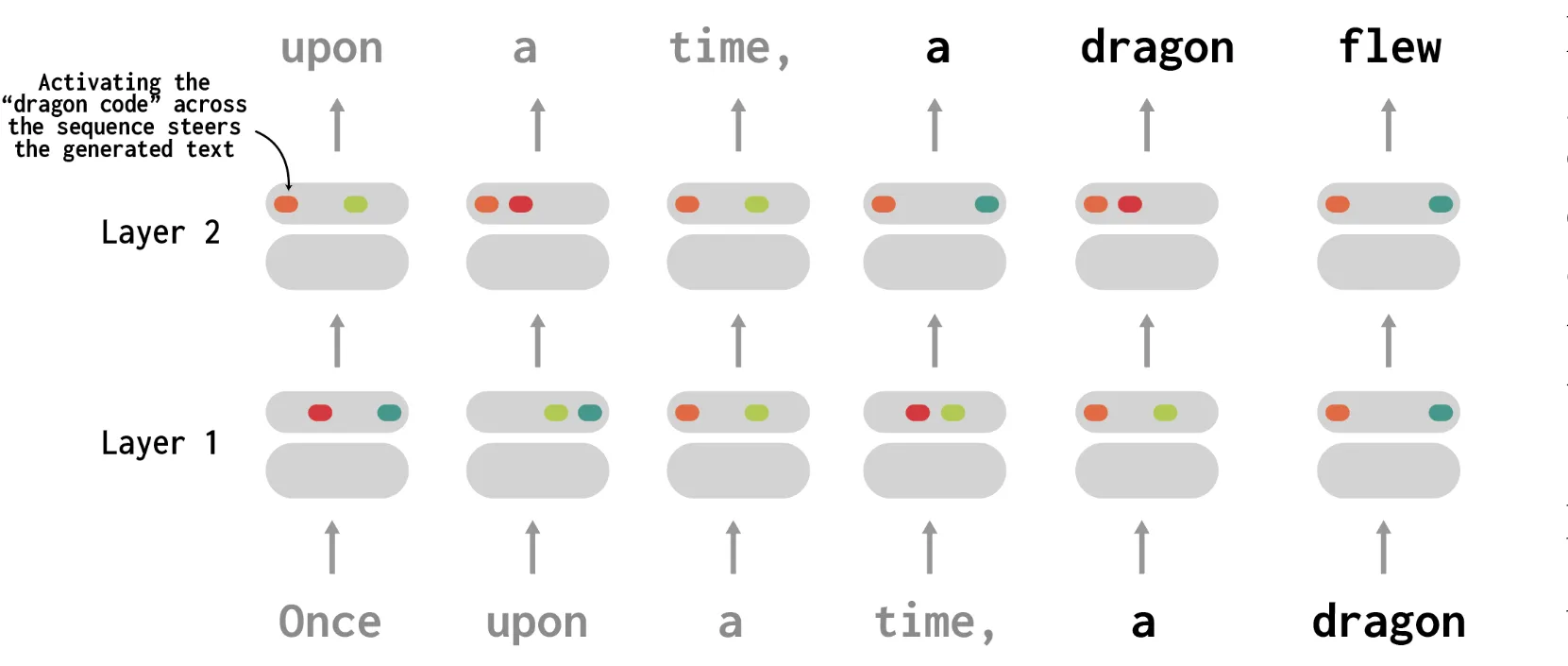

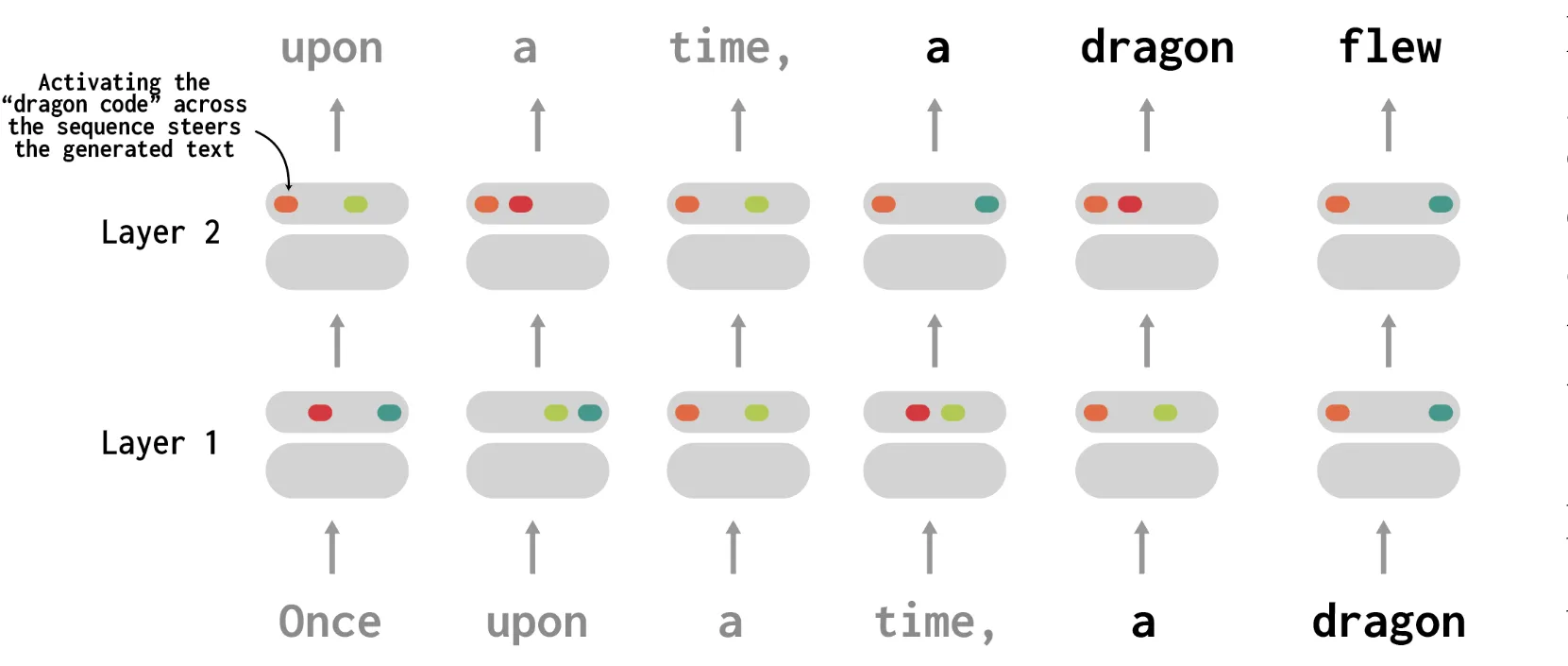

We applied our approach, codebook features, to language models up to 410M parameters. We found codes that activate on a wide range of concepts, spanning punctuation, syntax, lexical semantics, and high-level topics. In our experiments, codes were better predictors of simple textual features than neurons. They can also be used to steer behavior: directly activating the code for a given concept (say, dragon) causes the network, most of the time, to generate text about dragons.
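Steering can be pictured as editing the list of active codes before the layer output is rebuilt. The sketch below assumes that "activating" a code means adding its id to the active set and re-summing; the `steer` helper, the `DRAGON` id, and the toy codebook are hypothetical names and values chosen for illustration.

```python
def steer(active_ids, codebook, concept_code_id):
    """Force one code on, then rebuild the layer output as the sum of active codes.

    Assumption: steering adds the concept code to the active set. Other
    intervention schemes (for example, replacing an active code) are possible.
    """
    steered_ids = sorted(set(active_ids) | {concept_code_id})
    dim = len(codebook[0])
    output = [sum(codebook[i][d] for i in steered_ids) for d in range(dim)]
    return steered_ids, output

# Toy 2-dimensional codebook; code 2 stands in for a "dragon" code (hypothetical).
codebook = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
DRAGON = 2
steered_ids, output = steer([0], codebook, DRAGON)
print(steered_ids, output)
```

Because the intervention is a discrete edit (a code is either on or off), there is no steering magnitude to tune in this simplified picture.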

Surprisingly, even when the quantization bottleneck shrinks the information content of an activation vector by a factor of more than 100, next-token prediction accuracy is usually reduced by less than 5%.
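A back-of-the-envelope calculation shows how a factor of this size can arise. The numbers below (a 1024-dimensional activation stored at 16 bits per value, a codebook of 1024 codes, 8 active codes) are hypothetical and chosen only to keep the arithmetic simple; they are not the settings used in our experiments.

```python
import math

def dense_bits(dim, bits_per_value):
    """Bits needed to store a dense activation vector."""
    return dim * bits_per_value

def code_bits(num_codes, k):
    """Upper bound on bits needed to name k active codes by their indices."""
    return k * math.log2(num_codes)

# All values here are illustrative assumptions.
dense = dense_bits(dim=1024, bits_per_value=16)
sparse = code_bits(num_codes=1024, k=8)
ratio = dense / sparse
print(dense, sparse, ratio)
```

Under these assumptions the dense vector carries 16384 bits while the list of active codes needs at most 80, a reduction of roughly 200x.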

Our work is a promising foundation for the interpretability and control of neural networks: it should aid in discovering circuits across layers, enable more sophisticated control of model behaviors, and make larger-scale interpretability methods more tractable.

For more information, check out the full paper or play with our demo on HuggingFace. If you’re also interested in making AI systems interpretable, we’re hiring! Check out our roles at FAR.AI.
