Scientists Call for Global AI Safety Preparedness to Avert Catastrophic Risks

September 16, 2024

Summary

Leading global AI scientists convened in Venice for the third International Dialogue on AI Safety (IDAIS-Venice), hosted by the Safe AI Forum (a project of FAR.AI) in partnership with the Berggruen Institute. Attendees, including Turing Award winners Yoshua Bengio, Andrew Yao, and Geoffrey Hinton, called for emergency preparedness to avert catastrophic risks from advanced AI systems.

Venice, Italy - On September 6-8, 2024, leading global AI scientists and policy experts convened in Venice for the third International Dialogue on AI Safety (IDAIS-Venice), hosted by the Safe AI Forum (SAIF), a project of FAR.AI, in collaboration with the Berggruen Institute. During the event, computer scientists including Turing Award winners Yoshua Bengio, Andrew Yao, and Geoffrey Hinton joined forces with governance experts such as Tsinghua professor Xue Lan and Johns Hopkins professor Gillian Hadfield to develop policy proposals for global AI safety.

The event took place over three days at the Casa dei Tre Oci in Venice and focused on enforcement mechanisms for the AI development red lines outlined at the previous IDAIS-Beijing event. Participants worked to create concrete proposals to prevent these red lines from being breached and to ensure the safe development of advanced AI systems.

The discussion resulted in a consensus statement outlining three key proposals:

Emergency Preparedness: The expert participants underscored the need to be prepared for risks from advanced AI that may emerge at any time. Participants agreed that highly capable AI systems are likely to be developed in the coming decades, and could potentially be developed imminently. To address this urgent concern, they proposed international emergency preparedness agreements. Through these agreements, domestic AI safety authorities would convene, collaborate on, and commit to implementing model registration and disclosures, incident reporting, tripwires, and contingency plans. This proposal acknowledges the potential for significant risks from advanced AI to emerge rapidly and unexpectedly, necessitating a coordinated global response.

Safety Assurance: To ensure that the agreed-upon red lines are not crossed, the statement advocates for a comprehensive safety assurance framework. Under this framework, domestic AI safety authorities should require developers to present high-confidence safety cases prior to deploying models whose capabilities exceed specified thresholds. Post-deployment monitoring should also be a key component of assurance for highly capable AI systems as they become more widely adopted. Importantly, these safety assurances should be subject to independent audits, adding an extra layer of scrutiny and accountability to the process.

Safety and Verification Research: The participants emphasized that the research community needs to develop techniques that would allow states to rigorously verify that AI safety-related claims made by developers, and potentially other states, are true and valid. To ensure the independence and credibility of this research, they stressed that it should be conducted globally and funded by a wide range of governments and philanthropists. This approach aims to create a robust, unbiased framework for assessing and validating AI safety measures on an international scale.

The first half of the event focused on developing the statement, which was then presented to policy experts, including former heads of state, who joined for the latter part of the program. Former Baidu president and current Tsinghua professor Ya-Qin Zhang reflected on the impact of IDAIS, stating, “IDAIS has played a pivotal role in understanding the key issues and advancing our understanding of extreme risks of frontier AI. The results and statements from IDAIS have been used as a critical and credible source of references for many governments to formulate their relevant policies and regulations, including China.”

About the International Dialogues on AI Safety

The International Dialogues on AI Safety is an initiative that brings together scientists from around the world to collaborate on mitigating the risks of artificial intelligence. This third event was held in partnership between the Berggruen Institute and the Safe AI Forum, a fiscally sponsored project of FAR.AI. Read more about IDAIS here.

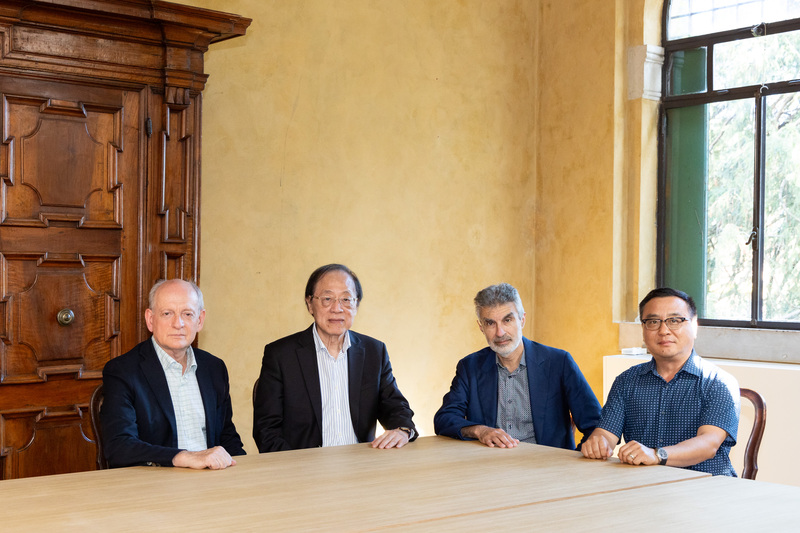

IDAIS-Venice was convened by (from left to right) Professors Stuart Russell, Andrew Yao, Yoshua Bengio and Ya-Qin Zhang.